It is time to turn our attention to modern-day system design, which would be incomplete without a distributed Machine Learning (ML) serving system. ML models are used in several modern-day systems. For serving inferences from these models, there is usually a dedicated system that exposes a microservice upon which all other services rely. In this insight, we will explore the design of one such serving system for the Transformer model - a cornerstone ML model in Generative Artificial Intelligence.

This paper was presented at USENIX Operating System Design and Implementation (OSDI) '22 by Seoul National University and offers an excellent introduction to modern ML serving systems.

In this insight, we will start by discussing what hardware accelerators are and how they are becoming crucial in modern computing. Then, we will explore GPUs - the most popular hardware accelerators today - in great detail, discussing their specific architectures and specifications. Next, we will perform a deep dive into Transformer models. We will also touch upon pipelined parallelism, a concept found in distributed parallel data processing and compiler design. Finally, we will examine Orca to see its specific strategies, design, architecture, and pipelining used for optimizing Transformer serving.

1. Hardware Accelerators

- Graphics Processing Units (GPUs): Originally designed for Single Instruction, Multiple Data (SIMD) processing, making them ideal for image manipulation. More recently, GPUs have become crucial for training and inference in AI and machine learning (ML) applications.

- Field-Programmable Gate Arrays (FPGAs): These integrated circuits offer post-manufacturing reconfigurability. They consist of a grid of reconfigurable logic blocks and programmable interconnects, enabling users to implement custom digital circuits tailored to specific applications.

- Cryptographic Accelerators: Co-processors specifically engineered to handle computationally demanding cryptographic operations.

2. Graphics Processing Unit (GPUs)

GPUs were initially designed for rendering graphics. However, they evolved into powerful parallel processors. CPUs typically use a Single Instruction, Single Data (SISD) approach, whereas GPUs excel at SIMD computations. This means they can apply the same operation to many data points simultaneously, which is ideal for tasks like image processing, where identical computations are performed on numerous pixels.

2.1. The Rise of General-Purpose GPUs

A significant shift occurred in 2007 with the introduction of NVIDIA's TESLA architecture. This marked the advent of the first General-Purpose GPUs (GPGPUs), specifically designed for non-graphics computations. Unlike consumer-oriented GeForce cards, TESLA GPUs were built for high-performance SIMD operations. This innovation paved the way for subsequent generations of powerful GPU architectures like the V100 (2017), A100 (2020), and H100 (2022), which are now widely used in data centers and for scientific computing. One can program TESLA and later GPUs using CUDA.

2.2. Architecture

A GPU's power comes from its highly parallel architecture, as shown in illustration 1. GPUs are composed of many Streaming Multiprocessors (SMs), often referred to as cores. For example, a V100 GPU has 80 SMs. Each SM, in turn, contains multiple subcores. A V100, for instance, has four subcores per core, as shown in illustration 2.

Each subcore is equipped with specialized units to handle various computations, as shown in illustration 3:

- Fetch/decode units for instructions.

- Arithmetic units for integer and floating-point operations.

- Tensor units for matrix computations, crucial for deep learning.

- Load/store units for memory access.

- A set of warps, where a warp is a collection of registers.

2.3. Memory Model

GPUs feature an on-chip memory hierarchy similar to a Non-Uniform Memory Access (NUMA) architecture, with three levels:

- Global memory: Accessible by all subcores.

- Shared memory: Accessible only within a specific SM (core).

- Local memory: Accessible only by a single thread.

2.4. CUDA

CUDA (Compute Unified Device Architecture) is a programming language used to develop GPU kernels. A kernel is a routine specifically compiled to run on accelerators like GPUs.

Consider a simple loop like

for (int i = 0; i < n; i++)

a[i] = 2 * a[i];

The body of the loop is a prime example of a kernel, as it involves the same operation applied to multiple data points.

When a program runs, it typically starts on the CPU. To utilize the GPU, the CPU first copies the necessary data to the GPU's global memory. Then, it initiates the creation of threads on the GPU.

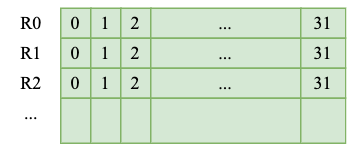

For very large datasets (e.g., billions of data items), the data is divided into blocks. Each block is mapped to an SM core in a round-robin fashion, with the context stored in warp registers. This allows for efficient distribution of work across the numerous parallel processing units of the GPU. While the entire dataset resides in global memory, individual threads can copy portions to their respective shared or local memory for faster access during computation.

2.5. Synchronization Primitives

To manage parallel execution and data consistency, CUDA provides synchronization primitives:

- Barriers: Used to synchronize threads within a thread block. For instance, one thread might copy data from global to SM memory, and the other threads in that block will wait until the copy is complete.

- Atomics: Used to synchronize access to shared and global variables, ensuring that concurrent writes or reads don't lead to data corruption.

2.6. Specifications

Table 1: Specifications of popular GPUs.

2.7. GPU ↔ GPU Communication

3. ML Serving

Once a ML model is trained, the real work of ML serving (or model serving) begins. ML serving is an infrastructure challenge focused on taking that trained model, packaging it up, and deploying it as an API. The goal is to make ML models readily available to generate predictions at scale.

Every ML serving system operates with two distinct layers:

- The Serving Layer (Frontend): Responsible for receiving incoming prediction requests from other systems and then handling the responses back to them.

- The Execution Layer (Backend): Stores the actual ML model and runs the inputs through it to generate the output, which are then passed back to the serving layer.

For ML models like Transformers (a widely used modern architecture), specific systems have emerged to handle each layer. For the serving layer, popular choices include Triton and TensorFlow Serving. For execution layer, FasterTransformer and LightSeq are frequently used.

4. Transformers

- I'm not an ML expert, and my knowledge of Transformers is limited to what's essential for grasping the underlying training and serving system architectures. This section will offer a concise overview of only the most crucial aspects.

- There are many variations of Transformers used for a variety of applications like language processing, image processing, etc. Here we are going to explore a simple transformer for language processing only.

- The section assumes that readers have a basic understanding of neural networks.

4.1. The Attention Layer

- Query (Q): What is being looked at? In the context of text processing, this represents the current token that the attention mechanism is focusing on.

- Keys (K): What are the elements to pay attention to? These are all the tokens in the sequence that the query might attend to.

- Values (V): What are values associated with the keys?

4..1.1. The Mathematics Behind Attention

- Query (Q): A 1 x H dimensional vector (or matrix). This is essentially the input to the attention layer, representing the token whose relationships with others we're trying to understand.

- Key (K): An L x H dimensional matrix, where L is the total number of tokens.

- Value (V): Another L x H dimensional matrix.

- QKT: This matrix multiplication results in a 1 x L dimensional matrix. This matrix quantifies how much "attention" the query should pay to each of the M tokens.

- softmax(...): Applying the softmax function to this 1 x L matrix normalizes these attention scores, ensuring they sum up to 1.

- softmax(QKT)V: Finally, multiplying these normalized attention scores by the value matrix (V) yields a 1 x H dimensional matrix (or a vector). This resulting vector is the output of the attention layer for the given input query.

4.2. Attention Layer for Autoregressive Models

- Query (Q): This is the embedding of the last token generated, serving as the context for predicting the next one.

- Keys (K): These are the keys for all tokens that precede the token currently being predicted.

- Values (V): These are the values for all tokens that precede the token currently being predicted.

4.2.1. Example Walkthrough

- Iteration 1:

- Query: "this"

- Context: "I think this"

- Output: "is"

- Iteration 2:

- Query: "is"

- Context: "I think this is"

- Output: "great"

- Iteration 3:

- Query: "great"

- Context: "I think this is great"

- Output: <EOS> (End of Sequence token)

4.3. Batching

- Query (Q): A B × H dimensional matrix.

- Key (K): A B × L × H dimensional matrix.

- Value (V): A B × L × H dimensional matrix.

5. Pipelined Parallelism

6. Orca

6.1. Design

6.2. Limitations of Existing Transformer Serving Systems

- Batch Bottleneck: The performance of an entire batch is bottlenecked by the slowest or longest-running request within it. If one request requires generating significantly more tokens than others (e.g., 100 times more), all other requests in that batch will be delayed until the longest one completes.

- Forced Padding: To create uniform batches, all requests are typically forced to have an equal number of tokens. Existing systems achieve this by padding shorter contexts to match the length of the longest context in the batch, leading to wasted computation and memory.

6.2.1. Solution 1: Iteration-Level Scheduling

6.2.1.1. Request Selection for Scheduling

- Maximum Batch Size: This is primarily constrained by the GPU's computational capacity, which depends on its core and subcore count.

- Available GPU Memory: The most variable element of memory consumption is the KV cache stored in the Attention Manager. Model parameters, code, and other static elements consume a constant amount of memory. Each request has an upper bound on the maximum number of tokens it can generate, which in turn sets an upper bound on its memory footprint within the Attention Manager.

- Randomly picks a request from the pool until the batch reaches its maximum size (Lines 21-23).

- Selects the requests for processing if their maximum token slots in the Attention Manager does not exceed the total available Attention Manager slots (Lines 24-26).

- Once selected, these requests are processed through one iteration of the transformer. After the iteration completes, the newly generated token for each request is appended to it. Requests marked as finished (Line 13-14) are removed from the batch, and their reserved slots in the Attention Manager are freed, allowing new requests to be added.

6.2.2. Solution 2: Selective Batching

6.3. Architecture

6.3.1. Partitioning

- Inter-layer split: This approach distributes different layers of the model across various GPUs. The output of one layer on one GPU is then communicated as input to the next layer on another GPU.

- Intra-layer split: This method involves splitting within a single layer. ML models are fundamentally composed of weight matrices and bias vectors. These often share a common dimension, for instance H, representing the hidden state size or embedding vector length. Intra-layer splitting divides these weights and biases along this H dimension. For example, a key matrix Wk with dimensions (1, H) could be split into (1, H1), (1, H2), and (1, H3), where H1 + H2 + H3 = H. Similarly, the input query vector is also split. Essentially, this approach distributes the computation of embedding features.

Figure 6: Copy of figure 6 from the paper.

6.3.2. The Request Execution Flow

6.3.3. Communication

6.4. Pipelined Parallelism

6.4.1. Pipelining in Orca

6.4.2. Pipelining in FasterTransformer

- It doesn't fundamentally solve the problem of latency. The execution engine's output still waits for all requests across all microbatches to complete, due to its request-level scheduling.

- It can negatively impact latency. Each batch effectively has to pass through more stages. If there are N microbatches, this can add up to N - 1 additional stages of sequential processing for the entire batch to complete.

7. Evaluation

7.1. Microbenchmarks

- Orca performed slightly worse compared to FasterTransformer. This was mostly because Orca doesn't use batching for Attention layers, which increases the overhead.

- However, as the model size increases, which requires its deployment across multiple GPUs and hence also necessitates cross-CPU communication, Orca outperforms FasterTransformer by 47% (175B model, 16 GPUs). This is primarily because of Orca's use of NCCL for cross-GPU communication, reserving it for data only. Control messages are on a separate (gRPC) channel.

7.2. Synthetic Requests

- The per-request latency increased as the model size increased due to increased communication across GPUs.

- Due to miscellaneous overheads, the per-request latency also increased as the throughput increased up to a point, after which the latency shot up too high.

- Orca offered much higher throughput support than FasterTransformer. In both the 175B and 341B models, Orca supported at least 2 req/s without latency significantly shooting up.

- With the 101B model and very low throughput, FasterTransformer performed better than Orca in terms of latency. This was because FasterTransformer used batching in Attention layers.

7.3. Effect of Batch Size

As batch size increased, Orca offered higher throughput. The per-request latency remained constant across different batch sizes. The tipping point for each batch size was at different throughput levels. With a batch size of 32, 6 req/s throughput was achievable.As batch size increased, FasterTransformer also provided better throughput. Microbatching was not very useful; in fact, maximum throughput was achieved by setting microbatch sizes equal to the batch size. Increasing batch size can help, but this also increases the likelihood of including a request that has significantly more tokens, thereby slowing down the entire pipeline.

Comments

Post a Comment