We have explored several databases up until now, most of which have been NoSQL. We also discussed one SQL database that is not truly distributed, as its transactions are executed on a single machine and the logs are subsequently replicated. Now, we will take a look at a database that is truly distributed, where transactions run in a distributed fashion.

This landmark paper, presented at the USENIX Symposium on Operating Systems Design and Implementation (OSDI) '12, has become one of Google's most significant contributions to distributed computing. It introduced TrueTime, which changed what distributed systems can assume about clocks. Authored by J.C. Corbett, with contributions from pioneers like Jeff Dean and Sanjay Ghemawat, it represents the leading edge of the field.

In this insight, we will first revisit the different types of databases. Then, we will review the CAP theorem, a well-known result in computer science that is widely understood in the industry. Next, we will dive deep into SQL databases, specifically discussing isolation properties. We will then cover serializability and understand how it can be achieved in distributed SQL databases. Following that, we will introduce Google’s TrueTime, the mammoth system that serves as the backbone for solving serializability in distributed SQL databases. Then, we will examine Spanner and understand the intricacies of the system, running through examples to see how transactions work. Lastly, we will revisit the CAP theorem to understand how Spanner and TrueTime fit within its framework.

Recommended Reads:

- Paper Insights - Practical Uses of Synchronized Clocks in Distributed Systems where we discussed why clock synchronization is necessary but not sufficient for external consistency.

- Paper Insights - A New Presumed Commit Optimization for Two Phase Commit, where two-phase commit (2PC) was introduced along with how it is implemented in a distributed system.

- Paper Insights - Amazon Aurora: Design Considerations for High Throughput Cloud-Native Relational Databases, where we discussed how Amazon Aurora builds a (not quite) distributed SQL database without using 2PC.

1. Databases

Databases generally fall into two categories:

- SQL Databases: These adhere to ACID (Atomicity, Consistency, Isolation, Durability) properties to ensure reliable transactions.

- NoSQL Databases: These often follow BASE (Basically Available, Soft state, Eventually consistent) principles, prioritizing availability and scalability over strict consistency.

1.1. SQL Databases

SQL databases guarantee ACID properties, ensuring reliable data transactions:

- Atomicity: Transactions are treated as a single, indivisible unit of work. Either all changes within a transaction are applied, or none are.

- Consistency: A transaction maintains the integrity of the database by ensuring that all defined rules and constraints (like keys, values, and other constraints) are preserved.

- Isolation: Transactions are executed independently of each other. One transaction's operations won't interfere with another's.

- Durability: Once a transaction is committed, the changes are permanent and will survive even system failures.

Examples of popular SQL databases include MySQL, Microsoft SQL Server, Google Spanner (a distributed database), and Amazon Aurora (which replicates its storage across nodes but, as discussed later, executes transactions on a single instance).

1.2. NoSQL Databases

NoSQL databases often prioritize availability and scalability over strict consistency, adhering to what's sometimes described as BASE principles:

- Basically Available: NoSQL databases often favor availability over strong consistency. This means the system remains operational even if some data is temporarily inconsistent. Conflicts arising from concurrent operations are typically resolved later.

- Soft State: The database's state isn't guaranteed to be consistent at any given moment. It's a "soft" state, meaning its accuracy is probabilistic.

- Eventually Consistent: The database strives to achieve consistency eventually. Given enough time, all updates will propagate throughout the system, and all nodes will see the same data.

Recommended Reads:

- Paper Insights - Cassandra - A Decentralized Structured Storage System

- Paper Insights - Dynamo: Amazon's Highly Available Key-value Store

2. The CAP Theorem

A distributed data store can only guarantee two out of the following three properties: Consistency, Availability, and Partition Tolerance.

This is shown in illustration 1. The CAP theorem leads to choices like:

- Strong Consistency vs. Eventual Consistency: How strictly do you need data to be consistent across all nodes?

- Availability: How important is it that the system remains operational and responsive, even during failures and partitions?

- Partition Tolerance: How well does the system handle network partitions?

ACID databases are categorized as CP (Consistent and Partition-tolerant). This means that in the event of a network partition, some shards of the database might become unavailable to maintain consistency. BASE databases, on the other hand, are AP (Available and Partition-tolerant). They prioritize availability during network partitions, even if it means temporarily sacrificing consistency.

Illustration 1: The CAP theorem.

Beyond CAP, other related theorems exist, such as:

- PACELC: This theorem expands on CAP, stating that if there's a partition, you must choose between Availability (A) and Consistency (C), else (when there's no partition), you still have a trade-off between Latency (L) and Consistency (C).

- Harvest-Yield: This is an older theorem that's less commonly discussed today.

3. Availability Numbers

System availability is often expressed using "nines". For instance:

- Three 9s: 99.9% availability

- Four 9s: 99.99% availability

- Five 9s: 99.999% availability

This percentage represents the portion of queries expected to be answered without a system failure (though the response might not be correct or consistent).
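To make the "nines" concrete, the sketch below converts an availability percentage into a yearly downtime budget. It assumes availability is interpreted as a fraction of time in a 365-day year (rather than a fraction of queries); the function name is chosen here for illustration only.

```python
def allowed_downtime_minutes_per_year(availability_percent: float) -> float:
    """Return the downtime budget, in minutes per 365-day year, for a given availability."""
    minutes_per_year = 365 * 24 * 60  # 525,600 minutes
    return minutes_per_year * (1 - availability_percent / 100)


if __name__ == "__main__":
    for label, pct in [("three 9s", 99.9), ("four 9s", 99.99), ("five 9s", 99.999)]:
        minutes = allowed_downtime_minutes_per_year(pct)
        print(f"{label} ({pct}%): about {minutes:.2f} minutes of downtime per year")
```

Each additional nine shrinks the budget by a factor of ten, which is why moving from four to five 9s is so costly in practice.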

Typically, availability increases with the geographic scope of the system. Local replication might offer lower availability, while regional and global systems aim for higher availability. This is because larger, geographically distributed systems have more resources and are less susceptible to correlated failures that could affect a smaller, localized system. For example, a system might provide 99.9% availability within a single cluster, 99.99% within an availability zone, and 99.999% globally.

4. SQL Databases

As previously mentioned, SQL databases must guarantee ACID properties. Atomicity and Consistency are crucial, and these are often achieved through transaction isolation.

Consider a bank database and a transfer transaction that moves funds from account A to account B. This transaction must exhibit the following properties:

- Atomicity: The entire transfer must either complete successfully or fail entirely. The database (and any external systems) should never observe an intermediate state where the money has left account A but not yet arrived in account B.

- Consistency: The total balance across all accounts must remain constant after any transaction.

4.1. Transaction Isolation

Isolation is typically implemented using one of two mechanisms:

- Locks: A transaction could acquire an exclusive lock on account A when it writes A. This prevents other transactions from reading A's value until the transaction commits. Locks are managed using a two-phase locking protocol (not to be confused with the two-phase commit protocol).

- Version Numbers: Data items A and B could have version numbers. Any write operation creates a new version. These new versions are only committed when the transaction commits. The transaction can only commit successfully if the version numbers of A and B haven't been changed by another transaction in the meantime.
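The version-number approach can be sketched as a small optimistic check at commit time. This is a minimal in-memory illustration, not how any particular database implements it; `VersionedStore` and `transfer` are hypothetical names introduced here.

```python
class VersionedStore:
    """Toy in-memory store where every key holds a (value, version) pair."""

    def __init__(self, initial):
        self._data = {key: (value, 0) for key, value in initial.items()}

    def read(self, key):
        """Return (value, version) for the key."""
        return self._data[key]

    def commit(self, read_versions, writes):
        """Apply writes only if every key that was read still has the version seen."""
        for key, version in read_versions.items():
            if self._data[key][1] != version:
                return False  # conflict: another transaction committed in the meantime
        for key, value in writes.items():
            _, version = self._data[key]
            self._data[key] = (value, version + 1)
        return True


def transfer(store, src, dst, amount):
    """Move `amount` from src to dst; returns False if the commit-time check fails."""
    src_val, src_ver = store.read(src)
    dst_val, dst_ver = store.read(dst)
    return store.commit(
        {src: src_ver, dst: dst_ver},
        {src: src_val - amount, dst: dst_val + amount},
    )
```

A transaction that read stale versions simply fails to commit and can be retried, whereas the lock-based approach makes conflicting transactions wait instead.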

4.1.1. Levels of Isolation

SQL databases offer different levels of transaction isolation, each with varying degrees of consistency and performance:

- Serializable: This is the highest isolation level, guaranteeing that the effect of concurrent transactions is equivalent to some serial execution order (also known as multi-data sequential consistency). This makes reasoning about transactions straightforward for users.

- Repeatable Reads: Similar to Serializable, but allows other transactions to insert new data items. This means that while a transaction sees a consistent snapshot of existing data, it might also see newly inserted data items.

- Read Committed: Allows a transaction to read changes made by other committed transactions while it's in progress. While this might seem reasonable, it can lead to inconsistencies. Imagine two transactions: T1 transferring $50 from A to B, and another T2 reading the balances of A and B. A serializable read would return either {A: $100, B: $150} or {A: $50, B: $200}, depending on the order of execution. However, a read committed read could return {A: $100, B: $200}. This can occur if T2 reads A's balance ($100), then T1 commits, and then T2 reads B's balance ($200).

- Read Uncommitted (Dirty Reads): The lowest isolation level, allowing a transaction to read uncommitted values from other transactions. This can lead to reading data modified by a transaction that is later rolled back, resulting in inconsistencies.
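The read committed anomaly from the list above can be reproduced with a toy interleaving. The sketch below is only an illustration of the schedule (T2 reads A, T1 commits, T2 reads B); the function names are made up for this example and no real database is involved.

```python
def t1_transfer_commit(db):
    """T1: move $50 from A to B and commit both writes together."""
    db["A"] -= 50
    db["B"] += 50


def t2_read_committed(db, interleave):
    """T2 reads A and B as two separate reads of the latest committed state."""
    a = db["A"]
    interleave()  # another transaction may commit between the two reads
    b = db["B"]
    return {"A": a, "B": b}


def t2_snapshot(db, interleave):
    """T2 reads both items from one snapshot taken at a single point in time."""
    snapshot = dict(db)
    interleave()
    return {"A": snapshot["A"], "B": snapshot["B"]}


if __name__ == "__main__":
    db = {"A": 100, "B": 150}
    print(t2_read_committed(db, lambda: t1_transfer_commit(db)))  # {'A': 100, 'B': 200}

    db = {"A": 100, "B": 150}
    print(t2_snapshot(db, lambda: t1_transfer_commit(db)))  # {'A': 100, 'B': 150}
```

The first result corresponds to no point in time at which the database actually held those balances, which is exactly what a consistent read must rule out.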

The mechanisms used by databases to achieve serializability at high concurrency are complex, involving concepts like serializable schedules, conflict serializability, view serializability, and recoverability. For the sake of brevity, those details are omitted here.

4.2. Reads

In the context of database transactions, a "data item" refers to the smallest unit of data that can be locked and is guaranteed to be read and written atomically. Typically, a data item can be a row in a table.

SQL databases support various types of reads:

- Single Data Item Read: Retrieves only one data item.

- Multiple Data Item Read: Retrieves multiple data items.

A database read is considered consistent if the retrieved values reflect the state of the data items at some point in time.

For instance, if accounts A and B initially have balances of $100 and $150, respectively, and a transaction transfers $50 from A to B, a consistent read should return either {A: $100, B: $150} (before the transfer) or {A: $50, B: $200} (after the transfer).

4.2.1. Consistently Reading a Single Data Item

Reading a single data item consistently is straightforward. Regardless of when the query executes, it will retrieve a consistent value for that single item. This is supported by all isolation levels excluding read uncommitted.

4.2.2. Consistently Reading Multiple Data Items

5. Distributed SQL Databases

Distributed SQL databases are those where data is sharded across multiple nodes. Transaction execution within these systems is similar to how it works in standalone databases:

- Locking: The necessary data items are locked on each node involved in the transaction.

- Commit: The transaction is then committed. However, the commit process is more complex in a distributed setting, requiring a 2PC.


6. Serializability v/s Strict Serializability

Serializability ensures that the effects of all transactions are equivalent to some sequential execution order (also known as sequential consistency). Strict serializability (or external consistency) builds upon this by considering the temporal order of transactions.

A helpful analogy clarifies the distinction:

Imagine you successfully commit a transaction transferring money from your account to a friend's account. You then immediately call your friend to check their balance. If they don't see the transferred funds, the database violates strict serializability (external consistency).

However, the database could still maintain internal consistency (serializability). This means the final database state reflects some valid sequential order of transactions, even if it doesn't align with the real-time order of events. For example, if your friend later tries to withdraw $200, the success or failure of that withdrawal will depend on whether the database ordered their transaction after or before your transfer transaction, even if, in reality, your friend's request came after your transfer.

Another way to explain strict serializability is that the database's transaction ordering must respect the temporal semantics observed by external users. Formally, if transaction T2 is submitted by a client only after transaction T1 commits, then the database must order T2 after T1.

6.1. Strict Serializability in Standalone Databases

In serializable mode, SQL databases can guarantee serializability because all data resides within the database's boundaries. It's easy to implement locking mechanisms to ensure a transaction completes before the next one begins.

Even strict serializability is straightforward to achieve. Since all operations occur within the same process, the effect of a committed transaction is immediately visible to the next transaction.

6.2. Strict Serializability in Distributed Key-Value Systems

Data stores that operate on single data items (such as key-value stores, distributed file systems, and lock services like ZooKeeper) primarily rely on a distributed log to coordinate changes to the state of those items.

Recommended Read: Paper Insights - ZooKeeper: Wait-free coordination for Internet-scale systems

With single data items, strict serializability effectively becomes linearizability. The distributed log itself serves as the single source of truth for the ordering of transactions. Once a transaction is committed to the immutable distributed log, its effects become immediately visible to subsequent transactions.

All writes are linearizable because they must go through the leader. Even during leader changes, the new leader must catch up on all existing committed transactions in the distributed log before accepting new transactions. This ensures that the new leader's writes respect the prior ordering.

Linearizable reads are achieved by executing a read-only transaction at the leader. The leader guarantees that a transaction is acknowledged only after it's committed to the distributed log. Therefore, all read-only transactions through the leader see the latest state of the data store (because they are causally related to and ordered after previous transactions, as they go through the same leader).

It's important to note that relaxed reads (i.e., reads from a non-leader replica) might not be linearizable.

6.3. Strict Serializability in Aurora

Amazon Aurora achieves strict serializability because its transaction processing occurs within a single, non-distributed instance. This single instance handles all transactions and provides the latest committed value at any given point in time. This architecture is similar to a standalone database, making serializability, and even strict serializability, easy to implement.

However, as argued before, this architecture also means that Amazon Aurora isn't a truly distributed SQL database. All transactions must pass through a single primary instance, which can easily become a performance bottleneck.

6.4. Strict Serializability in Distributed SQL Database with 2PC

In a true distributed SQL database, data items are distributed across multiple participants, and these participants must coordinate commits using a protocol like 2PC. Each participant might itself be a Paxos group, composed of multiple nodes maintaining a distributed log for fault tolerance.

However, 2PC alone does not guarantee serializability. Consider the bank database with accounts A, B, and C on nodes 1, 2, and 3. A has $100, B has $150, and C has $0. Two transactions, T1 (transfer $50 from A to B) and T2 (transfer $200 from B to C), are submitted, with T2 following T1.

- Transaction T1 is initiated.

- Nodes 1 and 2 accept T1 and acknowledge the PREPARE message.

- Node 2 commits T1 and releases its locks before Node 1 commits.

- Transaction T2 is initiated.

- Nodes 2 and 3 accept T2. Critically, Node 2 now reflects the updated balance of B (due to the partial commit of T1).

- T2 commits on both Nodes 2 and 3.

The problem is that T1 is still uncommitted on Node 1 (and hence only partially committed) when T2 reads the updated balance of B on Node 2. T2 effectively runs at the read uncommitted isolation level: it sees the effects of T1 before T1 is fully committed, violating serializability.

The solution to achieving both serializability and strict serializability in a distributed SQL database involves establishing a globally consistent and externally consistent ordering of transactions. This is accomplished by timestamping every transaction upon entry into the system. When a transaction is received by any cohort, it's guaranteed to receive a timestamp greater than all previous transaction timestamps. Furthermore, all transactions will be ordered according to these timestamps.

However, generating such timestamps isn't trivial. It demands tight clock synchronization across all participating nodes. This is where Google's TrueTime comes into play.

7. TrueTime

Google's TrueTime revolutionized the generation of globally ordered timestamps, representing a significant breakthrough in distributed systems and fundamentally altering common assumptions. This innovation is what made the Spanner paper a landmark contribution to the field.

As Lamport highlighted in another seminal paper, achieving perfect global clock synchronization across all machines is impossible. Clocks can drift (running fast or slow), become completely out of sync, or be nearly perfect but still insufficient for ensuring system correctness. Consequently, most distributed system algorithms avoid relying on clock synchronization for correctness. "Most" because some algorithms do use clock synchronization to simplify assumptions; without such simplifications, certain problems (like consensus) would be impossible to solve (see the FLP impossibility).

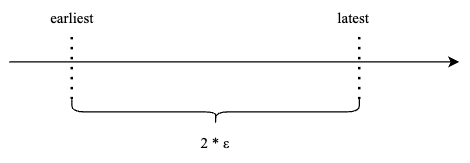

While clocks can drift, the error bound can be limited. When we read the time through an API such as System.Now(), it might return a time t that is up to ε away from the true global time. The actual time then lies within the interval [t - ε, t + ε]. TrueTime leverages this concept, but on a scale that makes the error bound ε extremely small at a global level.

7.1. API

- TT.now(): Returns a TTInterval object, representing a time interval: [earliest, latest].

- TT.after(t): Returns true if time t has definitely passed.

- TT.before(t): Returns true if time t has definitely not yet arrived.
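The three calls above can be mimicked with a local clock and a fixed error bound. The sketch below is only a stand-in to show the semantics: the real TrueTime derives ε from its time masters, whereas here `epsilon_seconds` is an assumed constant and `TrueTimeSketch` is a hypothetical class name.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class TTInterval:
    earliest: float
    latest: float


class TrueTimeSketch:
    """Toy stand-in for TrueTime: a local clock plus a fixed, assumed error bound."""

    def __init__(self, epsilon_seconds=0.007, clock=time.time):
        self.epsilon = epsilon_seconds
        self._clock = clock

    def now(self) -> TTInterval:
        """Interval that is assumed to contain the true time."""
        t = self._clock()
        return TTInterval(t - self.epsilon, t + self.epsilon)

    def after(self, t: float) -> bool:
        """True only if t has definitely passed."""
        return self.now().earliest > t

    def before(self, t: float) -> bool:
        """True only if t has definitely not arrived yet."""
        return self.now().latest < t
```

Note that `after(t)` and `before(t)` can both be false at the same moment: while t lies inside the current uncertainty interval, neither statement can be made with certainty.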

Illustration 2: TrueTime error bound.

7.2. Architecture

Google has invested heavily in achieving and maintaining the tight error bounds required by TrueTime.

Recommended Read: Paper Insights - Practical Uses of Synchronized Clocks in Distributed Systems where internals of NTP were discussed.

TrueTime relies on GPS and atomic clocks as its time references. While GPS provides highly accurate time, it's susceptible to hardware failures and radio interference. Atomic clocks, while very stable, can also drift due to frequency errors. These potential sources of error contribute to the overall error bound.

Image 1: TrueTime setup in a data center.

Each data center has one or more time servers (or "time masters"). These masters fall into two categories:

- GPS Time Masters: Equipped with GPS receivers, these nodes receive time information directly from satellites.

- Armageddon Masters: Equipped with local atomic clocks, these nodes serve as a backup to GPS time masters in case satellite connections are unavailable.

Within each data center:

- There's at least one time master.

- Each machine runs a time slave daemon.

The masters synchronize their time references with each other. Armageddon masters advertise a slowly increasing time uncertainty, while GPS masters advertise a much smaller uncertainty (close to ±40ns).

The time slave daemons poll multiple masters to mitigate vulnerabilities. Each master provides a time interval, and these intervals must be merged to determine the largest common interval.

Marzullo's algorithm is used for this interval intersection. It's a classic algorithm for finding the largest intersection of multiple intervals, which is exactly what's needed here. Any intervals that don't intersect with the largest common interval are discarded.
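For intuition, here is a minimal sketch of the textbook form of Marzullo's algorithm: a sweep over interval endpoints that finds the region agreed on by the largest number of sources. It is not claimed to match the exact variant used inside TrueTime, and the function name and tuple format are choices made for this example.

```python
def marzullo(intervals):
    """Return (count, (lo, hi)): the region covered by the most source intervals.

    intervals: list of (lo, hi) pairs with lo <= hi, one per time source.
    """
    events = []
    for lo, hi in intervals:
        events.append((lo, 0))  # 0 marks a start; sorts before an end at the same time
        events.append((hi, 1))  # 1 marks an end
    events.sort()

    best = count = 0
    best_lo = best_hi = None
    for i, (t, kind) in enumerate(events):
        if kind == 0:
            count += 1
            if count > best:
                best = count
                best_lo = t
                best_hi = events[i + 1][0]  # overlap lasts until the next endpoint
        else:
            count -= 1
    return best, (best_lo, best_hi)


if __name__ == "__main__":
    # Three sources that agree on [11, 12].
    print(marzullo([(8, 12), (11, 13), (10, 12)]))   # (3, (11, 12))
    # One source (14, 15) disagrees with the others and is effectively outvoted.
    print(marzullo([(8, 12), (11, 13), (14, 15)]))   # (2, (11, 12))
```

Sources whose intervals fall outside the winning region are the ones a daemon would treat as faulty and ignore.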

7.3. Calculating ε

Each daemon advertises a slowly increasing clock uncertainty, derived from a conservative estimate of worst-case local clock drift.

The daemon polls time masters only every 30 seconds, assuming a maximum drift rate of 200 μs per second. This polling interval contributes approximately 6 ms to the uncertainty. An additional 1 ms is added to account for communication delays to the time masters. Thus, ε is typically between 1 and 7 ms.
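The arithmetic behind the 1 to 7 ms range can be written out directly. The constants below are the figures quoted in the paragraph above; the function name is illustrative.

```python
DRIFT_RATE_US_PER_S = 200    # assumed worst-case local clock drift (microseconds per second)
POLL_INTERVAL_S = 30         # how often the daemon polls the time masters
BASE_UNCERTAINTY_MS = 1.0    # allowance for communication delay to the time masters


def epsilon_ms(seconds_since_last_sync: float) -> float:
    """Uncertainty grows linearly with time since the last synchronization."""
    drift_ms = DRIFT_RATE_US_PER_S * seconds_since_last_sync / 1000
    return BASE_UNCERTAINTY_MS + drift_ms


if __name__ == "__main__":
    for elapsed in (0, 15, POLL_INTERVAL_S):
        print(f"{elapsed:>2}s after sync: epsilon = {epsilon_ms(elapsed):.1f} ms")
```

The result is a sawtooth: ε starts near 1 ms right after a poll, climbs to about 7 ms just before the next one, and then drops again.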

With that understanding of TrueTime, let's now turn our attention to Google Spanner.

8. Spanner

Spanner was developed to address the limitations of earlier Google systems such as Megastore and the MySQL backend behind the F1 advertising system, which fell short on scalability or on the characteristics of a true distributed SQL database. Spanner was designed to offer:

- Geographic replication for global availability.

- Massive scalability, handling tens of terabytes of data.

- External consistency.

When Spanner was created, no other distributed database offered external consistency (although the theoretical underpinnings were understood). Google assembled a team of scientists and engineers to make this groundbreaking system a reality.

8.1. The Architecture

8.1.1. Interleaved Tables

8.1.2. Span Servers

8.2. Paxos Groups and Transaction Manager

A tablet isn't stored on a single machine. It's replicated according to the directory's replication policy. The set of machines holding a particular tablet forms a Paxos state machine, responsible for that tablet's data. The set of replicas within a Paxos state machine is called a Paxos group.

Directories within a tablet can be moved between Paxos groups using the movedir background task. Movedir is not a single transaction, so it doesn't block ongoing reads and writes: the directory's data is first copied to the other group in the background, and once all but a small remainder has been copied, a transaction atomically moves that remainder and updates the ownership metadata.

8.2.1. Lock Table

The Paxos group's leader stores the lock table, which is used during transaction processing. The lock table maps key ranges to lock states. Implicitly, locks are held on a range of keys. The other Paxos group members participate in consensus and log transaction writes and commits.

8.2.2. Transactions within Paxos Group

When a transaction stays within a single Paxos group (i.e., all read/modified data items reside within that group), 2PC is not required. The Paxos group's distributed log and the leader's lock table are sufficient to manage the transaction because all replicas within a Paxos group have the same data.

Locks are acquired at the transaction's start, modifications are logged to Paxos, and then a COMMIT log entry is added.

8.2.3. Transactions Across Paxos Groups

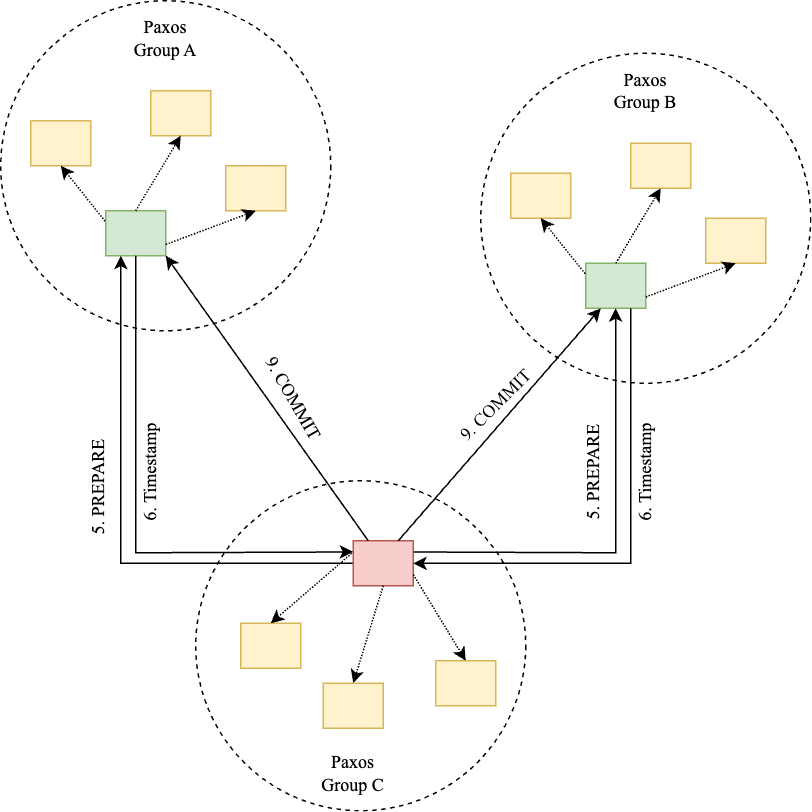

When a transaction spans multiple Paxos groups, 2PC is required. These Paxos state machines provide highly available cohorts for 2PC. (See consensus v/s 2PC). For 2PC, spanservers have a unit called the transaction manager.

The leader of a Paxos group in 2PC is called the participant leader, while other replicas within the Paxos group are participant slaves. One of the Paxos groups becomes the coordinator, and its leader becomes the coordinator leader. The other replicas of the coordinator group become coordinator slaves. The setup is shown in illustration 3.

Illustration 3: Paxos groups for 2PC.

8.2.4. Paxos Read-Only Transactions

Within a Paxos group, all read-only transactions must go through the leader for serializability. The leader ensures the transaction is ordered after all existing transactions. The leader also needs a quorum from a majority of replicas before a read is considered successful, because it may have been superseded without knowing it (e.g., in case of a view change). This quorum round trip can be avoided by letting the leader answer reads locally while it holds a lease, but that is only safe with strong clock synchronization. Spanner uses TrueTime to make this possible.

To understand more on how clock synchronization helps, refer to the previous post, which explains how Harp relies on reading from the primary. This process requires clock synchronization to maintain external consistency.

8.3. Witness Nodes

8.4. Transaction Processing

As mentioned earlier, achieving strict serializability on top of 2PC requires globally consistent, monotonically increasing timestamps for transactions. This is where TrueTime becomes essential. Let's examine how Spanner assigns timestamps to transactions.

Spanner supports two main transaction types:

- Read-write (R-W) transactions: These are the standard transactions that use locks (pessimistic concurrency control).

- Read-only (R-O) transactions: Transactions that don’t perform any modifications. Because transactions have globally consistent timestamps and the data store is versioned, read-only transactions can run lock-free. We'll explore how this works shortly.

Spanner also offers another type of read called a snapshot read with bounded staleness.

8.4.1. Paxos Leader Leases

8.4.2. Assigning Timestamps

Consistent timestamp assignment during 2PC is crucial for Spanner's external consistency. Transactions arrive at a coordinator, which is also a Paxos leader. Because a Paxos group has only one leader at any given time (leader disjointness), and a leader only assigns timestamps within the interval of its lease, each leader can assign timestamps that are greater than all previous transaction timestamps in its group.

Spanner's external consistency guarantee is: If transaction T2 starts after transaction T1 commits, then T2's commit timestamp must be greater than T1's. This ensures that T2 is ordered after T1, maintaining external consistency. For example, if you execute T1 and then tell a friend, they can subsequently execute T2 and observe the effect of T1.

Formally, let $e_i^{start}$ and $e_i^{commit}$ be the start and commit events of transaction $T_i$, $s_i$ be its assigned timestamp, and $t_{abs}(e)$ be the absolute time of event $e$. Then:

$$t_{abs}(e_1^{commit}) < t_{abs}(e_2^{start}) \Rightarrow s_1 < s_2$$

Spanner uses TrueTime to assign timestamps, ensuring that each timestamp is at least TT.now().latest at the time of assignment. This makes it no smaller than the absolute time at which the transaction was received. However, this alone isn't sufficient.

Commit Wait: After assigning a timestamp $s_i$, the coordinator leader waits until TT.after($s_i$) is true before making the commit visible. This guarantees that any leader invoking TT.now().latest for a transaction that starts afterwards will receive a timestamp greater than $s_i$. Therefore, all newer transactions will have higher timestamps.

This proof is detailed in Section 4.1.2 of the paper. It relies on the assumption that clients only send a second transaction after observing the commit of the first.
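Commit wait can be sketched with a simulated clock. Everything here is illustrative: `FakeClock` is a manually advanced clock so the example runs instantly, `EPSILON` is an assumed uncertainty bound, and the helper functions only imitate TT.now() and TT.after().

```python
EPSILON = 0.004  # assumed uncertainty bound in seconds (hypothetical value)


class FakeClock:
    """Manually advanced clock so the example is deterministic and does not sleep."""

    def __init__(self, start=0.0):
        self.t = start

    def now(self):
        return self.t

    def sleep(self, dt):
        self.t += dt


def tt_now(clock):
    """Imitates TT.now(): returns (earliest, latest)."""
    t = clock.now()
    return (t - EPSILON, t + EPSILON)


def tt_after(clock, s):
    """Imitates TT.after(s): true once s has definitely passed."""
    return tt_now(clock)[0] > s


def commit_with_wait(clock, step=0.001):
    """Pick s = TT.now().latest, then wait until s has definitely passed."""
    s = tt_now(clock)[1]
    waited = 0.0
    while not tt_after(clock, s):
        clock.sleep(step)
        waited += step
    return s, waited


if __name__ == "__main__":
    clock = FakeClock(start=100.0)
    s, waited = commit_with_wait(clock)
    print(f"commit timestamp {s:.3f}, waited about {waited * 1000:.0f} ms")
    print("next TT.now().latest is larger:", tt_now(clock)[1] > s)
```

With a constant ε the wait comes out to roughly 2ε, which is why keeping ε small matters so much for write latency.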

This timestamping mechanism applies to both read-only and read-write transactions.

8.4.3. Executing a R-W Transaction

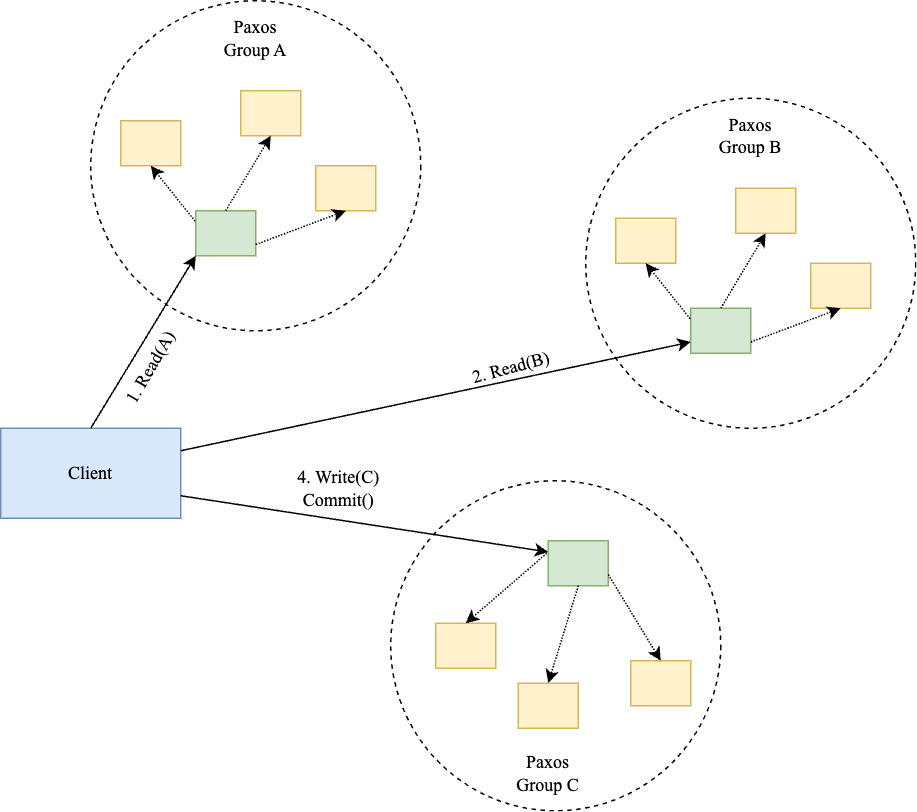

Illustration 4(a): Paxos groups in R-W transaction example.

Illustration 4(b): 2PC in R-W transaction example.

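Consider a transaction that reads A and B and writes their sum to C, where A, B, and C each reside in a different Paxos group. It proceeds as follows:

- txn.Read(A): The Paxos group leader for A returns the value of A and read-locks A to prevent concurrent modifications.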

- txn.Read(B): The Paxos group leader for B read-locks B and returns its value.

- txn.Write(C, A + B): The client buffers the write to C locally. Because writes are buffered at the client until commit, reads within a transaction don't see the transaction's own writes, i.e., Spanner doesn't support read-your-writes (RYW) within a transaction.

- txn.Commit(): The client initiates the commit. Any of the Paxos groups (A, B, or C) can act as the coordinator. Let's assume the Paxos group for C becomes the coordinator. The Write(C) and Commit() requests are sent to C's Paxos group.

- Prepare Phase: C's Paxos leader locks C, logs the new value, and sends a PREPARE message to the leaders of A and B's Paxos groups (these are the cohorts in the 2PC).

- Ack: The leaders for A and B log a PREPARE record through Paxos and acknowledge with their local TrueTime timestamps (TT.now().latest).

- Timestamp Assignment: The leader at C determines the maximum of all received timestamps (including its own) and assigns this as the transaction's commit timestamp. This timestamp is guaranteed to be greater than any timestamp assigned to a previous transaction affecting the same data items.

- Commit Wait: The leader at C waits until TT.after(s) is true, where s is the assigned commit timestamp.

- Commit Phase: C logs the commit, releases its locks, and sends the COMMIT message to A and B. A and B then apply the commit at the same timestamp and release their locks.
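The coordinator's side of the steps above can be sketched as follows. This is a simplified simulation under stated assumptions: timestamps are plain integers, `commit_wait` is a callable supplied by the caller that stands in for waiting until TT.after(s), and `coordinate_commit` is a hypothetical name, not part of any Spanner API.

```python
def coordinate_commit(prepare_acks, coordinator_ts, commit_wait):
    """Simulate the coordinator in the walkthrough and return (s, steps).

    prepare_acks: dict of participant name -> timestamp returned in its PREPARE ack.
    coordinator_ts: the coordinator's own TT.now().latest reading.
    commit_wait: callable invoked with s; stands in for blocking until TT.after(s).
    """
    steps = ["coordinator: lock C, log new value, send PREPARE"]
    for name, ts in prepare_acks.items():
        steps.append(f"{name}: log and ack with timestamp {ts}")

    # Commit timestamp is the maximum over all acks and the coordinator's own reading.
    s = max([coordinator_ts, *prepare_acks.values()])
    steps.append(f"coordinator: commit timestamp s = {s}")

    commit_wait(s)
    steps.append("coordinator: commit wait done, send COMMIT, release locks")
    for name in prepare_acks:
        steps.append(f"{name}: apply COMMIT, release locks")
    return s, steps


if __name__ == "__main__":
    waited_for = []
    s, steps = coordinate_commit({"A": 1005, "B": 1012}, 1009, waited_for.append)
    print("\n".join(steps))
```

Taking the maximum ensures the commit timestamp is not smaller than any timestamp a participant has already promised, and the commit wait happens before any lock is released.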

8.4.3.1. FAQs:

Q: What happens if two orthogonal transactions are submitted by two different clients at the same time?

Q: What if the leader of any Paxos group goes down during 2PC after PREPARE reply?

Q: What if, between lock(A) succeeding and lock(B) succeeding, B is modified by another transaction?

8.4.3.2. Deadlock Prevention

Because lock acquisition during individual transactions is unsynchronized, deadlocks are possible, as shown in illustration 5.

Illustration 5: Deadlock example.

Spanner implements transactions as sessions between clients and the leaders of the involved Paxos groups. Two deadlock prevention schemes are considered:

- Wound-Wait: An older transaction forces a younger one to abort and release a lock. A younger transaction waits for an older one to complete. Since timestamps haven't been assigned yet at this stage, ordering is determined solely by transaction start time (may be provided by client).

- Wait-Die: An older transaction waits for a younger one. A younger transaction requesting a lock from an older one is aborted.
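The two schemes differ only in what happens when a lock request conflicts with the current holder. A minimal sketch of both decision rules, assuming a smaller start time means an older transaction (function names and return strings are illustrative):

```python
def wound_wait(requester_start, holder_start):
    """Older requester wounds (aborts) the younger holder; younger requester waits."""
    if requester_start < holder_start:
        return "abort_holder"
    return "requester_waits"


def wait_die(requester_start, holder_start):
    """Older requester waits for the younger holder; younger requester dies (aborts)."""
    if requester_start < holder_start:
        return "requester_waits"
    return "abort_requester"


if __name__ == "__main__":
    old, young = 10, 20  # start times
    print("wound-wait, old requests from young:", wound_wait(old, young))
    print("wound-wait, young requests from old:", wound_wait(young, old))
    print("wait-die,   old requests from young:", wait_die(old, young))
    print("wait-die,   young requests from old:", wait_die(young, old))
```

In both schemes waiting only ever happens in one direction of age, so a cycle of transactions waiting on each other cannot form.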

8.4.4. Executing a R-O transaction

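A replica can serve a read at timestamp $t$ only if it is sufficiently up to date, that is, if $t \le t_{safe}$, where $t_{safe} = \min(t_{safe}^{Paxos}, t_{safe}^{TM})$:

- $t_{safe}^{Paxos}$: The highest timestamp of a transaction committed within the Paxos group.

- $t_{safe}^{TM}$: This accounts for pending transactions. If there are no pending transactions, $t_{safe}^{TM}$ is infinite, meaning the replica is safe to read from as long as $t_{safe}^{Paxos}$ is at least $t$. If there are pending (prepared but not yet committed) transactions, $t_{safe}^{TM}$ is calculated, per the paper, as one less than the earliest prepare timestamp among them: $t_{safe}^{TM} = \min_i(s_i^{prepare}) - 1$.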
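The safe-time check can be sketched in a few lines. This assumes, following the Spanner paper, that a replica may serve a read at timestamp t when t is at most the minimum of the two safe times, and that with pending transactions the transaction-manager safe time is one less than the smallest prepare timestamp. Timestamps are plain integers and the function names are illustrative.

```python
import math


def t_safe(last_applied_paxos_ts, pending_prepare_timestamps):
    """Return the replica's safe time given Paxos progress and pending 2PC transactions."""
    t_safe_paxos = last_applied_paxos_ts
    if pending_prepare_timestamps:
        # A pending transaction may still commit at or after its prepare timestamp.
        t_safe_tm = min(pending_prepare_timestamps) - 1
    else:
        t_safe_tm = math.inf
    return min(t_safe_paxos, t_safe_tm)


def can_serve_read(t, last_applied_paxos_ts, pending_prepare_timestamps):
    """A replica can serve a read at timestamp t only if t does not exceed its safe time."""
    return t <= t_safe(last_applied_paxos_ts, pending_prepare_timestamps)


if __name__ == "__main__":
    print(can_serve_read(90, last_applied_paxos_ts=100, pending_prepare_timestamps=[]))
    print(can_serve_read(90, last_applied_paxos_ts=100, pending_prepare_timestamps=[85]))
```

In the second call a transaction prepared at timestamp 85 might still commit before 90, so the replica cannot yet answer a read at 90 even though its Paxos log has advanced to 100.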

8.4.4.1. Executing Reads at a given timestamp

8.5. Schema Changes

8.6. Evaluation

- In experiments with a single replica per Paxos group, commit wait time was approximately 5 ms, and Paxos latency was about 9 ms. As the number of replicas increased, latency remained relatively constant, but with a lower standard deviation.

- Terminating Paxos leaders resulted in a 10-second wait for a new leader to be elected. The Spanner paper emphasizes the importance of Paxos leader stability for higher availability. Shorter lease times could help in this scenario. In the event of leader failure, restarting the leader is generally preferred.

- For TrueTime, the error bound ε is roughly less than 4 ms (p99) and 10 ms (p999).

Google's F1 advertising backend, which was originally based on sharded, master-slave replicated MySQL, was migrated to Spanner. F1 also maintains a history of all changes it makes, which is itself written to Spanner (change history).

The paper provides further internal details on how directories are also fragmented.

9. Spanner v/s CAP Theorem

Let's revisit the CAP theorem and examine how Spanner fits within its constraints. The information in this section is derived from Eric Brewer's paper.

Spanner, with its highly available Paxos groups for transaction management, might appear to violate the CAP theorem. However, it doesn't. Spanner prioritizes consistency. Its high availability is achieved at a significant maintenance cost borne by Google. Technically, it's still classified as a CP system. Spanner offers five 9s of availability.

9.1. Network Partitions

Network partitions, which can lead to availability loss, account for a relatively small percentage (8%) of Spanner's outages. Common network issues include:

- Individual data centers becoming disconnected.

- Misconfigured bandwidth.

- Network hardware failures.

- One-way traffic failures.

Google's extensive experience in network engineering allows them to build a highly reliable network. Spanner's network leverages Google-owned infrastructure, including the software-defined B4 network. Google's control over packet flow and routing enhances its resilience against network partitions.

9.2. Handling Crashes

- 2PC itself isn't crash-tolerant. If a participant fails, committing or aborting a transaction becomes challenging. This is an inherent limitation of 2PC. Spanner mitigates this somewhat by using Paxos groups for participants, ensuring high availability.

- Furthermore, during Paxos leader changes, a Spanner group essentially freezes until the lease expires. The paper suggests restarting the leader as a better solution than waiting for lease expiration.

9.3. Handling Network Partitions

2PC is not partition-tolerant; it cannot complete during a partition.

Even during partitions, Spanner transactions can proceed if the leader and a majority of a Paxos group remain on the same side of the partition.

Reads are generally more resilient. They can be performed at any replica that's caught up to the transaction's commit timestamp. Snapshot reads offer even greater robustness. However, if none of the replicas are sufficiently up-to-date (e.g., due to being on the non-majority side of a partition), reads will eventually fail.

10. TrueTime v/s CAP Theorem

Spanner's functionality heavily relies on TrueTime's accuracy and availability:

- Transaction timestamps: TrueTime provides the timestamps assigned to transactions.

- Paxos leader leases: Paxos group leader leases depend on TrueTime.

- Snapshot isolation: TrueTime's ability to generate consistent, ordered timestamps is fundamental to Spanner's versioning mechanism, which makes snapshot isolation possible. Without TrueTime, identifying data objects and ensuring a consistent snapshot (without partially applied updates) would be extremely difficult.

- Schema changes: TrueTime also facilitates schema changes.

Beyond Spanner, TrueTime has broader applications:

- Cross-system snapshots: TrueTime enables consistent snapshots across multiple independent systems without requiring 2PC. These systems can agree on a timestamp using TrueTime and then independently dump their state at that time.

- Workflow timestamps: TrueTime timestamps can be used as tokens passed through workflows (chains of events). Every system in the workflow can agree on the same timestamp.

TrueTime itself is not immune to the effects of network partitions. The underlying time sources (GPS receivers and atomic clocks) can drift apart, and as this drift increases, transactions may experience longer wait times before committing. Eventually, transactions may time out, impacting availability.
