In the CliqueMap paper, we explored how Remote Direct Memory Access (RDMA) serves as a backbone for an in-memory key-value store. In this paper, we will explore how RDMA can be leveraged to implement an algorithm for a more sophisticated problem: consensus. Consensus is a non-trivial problem, and achieving it in distributed systems is impossible and can only be done under certain assumptions. The algorithms that achieve consensus are often hard to understand - for example, Paxos. This paper takes the concepts of Paxos and implements them using RDMA to achieve microsecond-level consensus. This is very useful in applications demanding microsecond latency.

Presented at USENIX Operating System Design and Implementation (OSDI) '20, this influential paper from VMware Research and EPFL (Swiss) has since received significant attention within the distributed systems community. It was authored by Marcos Aguirela, a leading researcher at VMware.

In this insight, we will start with the latency requirements of modern microservices systems. We will also examine latency vs. throughput in detail and understand the trade-offs between them. Next, we will revisit RDMA - this time understanding hardware-based RDMA in greater detail. Finally, we will review what consensus is and see how Mu solves the consensus problem using a Paxos-like algorithm implemented using RDMA to achieve microsecond latency.

Paper Link

1. Microservices Latency

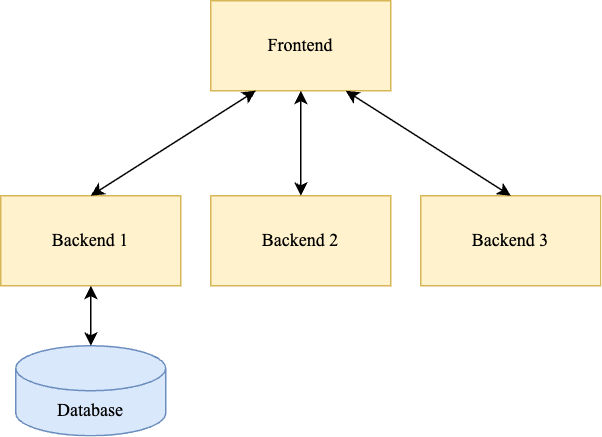

Almost all backend systems are composed of a multi-level microservices architecture, as shown in illustration 1.

Illustration 1: A microservices based system.

If 99% of requests to the backends are fast, the overall speed of the application will still be slow, as any single backend can introduce latency. For example, consider a frontend service that depends on five backend services, each with a good 99% latency. The probability that a random request will be fast is calculated as 0.995, which equals approximately 0.95 or 95%. This calculation still doesn't consider that the frontend service itself may run into issues. For example:

- CPU Scheduling: The thread processing responses from the backend services may not be scheduled on time by the CPU scheduler. Additionally, the usual Linux context switch time is 5 ms; even if the thread is scheduled on time, it may take a latency hit.

- Page Fault: The thread processing the response may run into a page fault and get descheduled by the operating system.

- Network Congestion: The network path used by the thread to communicate with backend services or clients might experience congestion.

To optimize the latency of the system, the application needs to optimize each backend to achieve four or five nines of availability, meaning that only when the p9999 or p99999 latency is low will the overall latency be good.

Jeff Dean has an excellent article on what it takes to optimize for tail latency in a cloud-like environment where thousands of jobs compete for resources. Researchers have explored various approaches to optimize the tail latency of applications, including:

- Steering interrupts to different CPU cores to improve processing efficiency.

- Implementing custom kernel scheduling policies, such as the ghost scheduler introduced by Google for Linux, to prioritize certain tasks.

- Using custom paging mechanisms, like huge pages, to reduce TLB misses and improve memory access times.

2. Latency v/s Throughput

Latency and throughput are two important measures of system performance. Latency defines the time it takes for a unit of work to be completed, and throughput defines the amount of work that can be done by the system per unit of time.

To understand this, consider a well-known analogy: a pipe through which water flows, as shown in illustration 2.

Illustration 2: Water pipe analogy.

Ignoring friction and viscosity, and imagining water flowing through the pipe at a constant speed, the latency of each water molecule would be the length of the pipe divided by the speed. The throughput of the pipe would be determined by its diameter. The larger the diameter, the more water can flow. Throughput is expressed as volume per unit of time; in terms of water, it could be gallons per second.

- Latency can impact throughput because if water flows faster, more water can pass through per unit of time.

- Conversely, throughput can also impact latency. If the diameter is small, less water can flow through, potentially causing a backlog or queue of water molecules waiting. This waiting time in the queue is part of the total latency.

Coming back to computer science, let's consider work items each requiring a fixed amount of CPU processing to complete. The latency of a work item would be the time it takes for its processing to finish after submission. If a work item requires 100 ms of processing and there is no queuing, all work items will take 100 ms to complete.

However, when a CPU is processing a work item, other submitted work items may be stalled. With one CPU, we achieve a throughput of 10 items/second. But not all work items will have the same latency; the worst-case latency (for the last work item in a sequence) can be 1 second. If, however, work items are submitted only after the previous one completes, there would be no queuing, and the latency would be uniformly 100 ms for all.

Adding more CPUs (assuming no communication overhead between them) can linearly increase throughput. With six CPUs, we could achieve a throughput of 60 items/second.

In both cases (the pipe example and the number of CPUs), there is a hard limit on the physical, tangible resources. The number of CPUs cannot be unbounded. This limit on physical resources imposes a maximum throughput beyond which work items will experience queuing no matter how fast or slow they are submitted, thereby impacting latency. In the example above, where work items take 100 ms of CPU time and there are 6 CPUs, the maximum supported throughput without impacting the 100 ms latency is 60 items per second (assuming work items are submitted sequentially). Beyond this throughput, queuing will occur, and latency will increase.

In an ideal system, latency remains constant as throughput increases up to a certain point. Beyond this point, latency begins to increase as work items experience queuing. In a real-world scenario, well-designed systems strive to approximate this ideal behavior, although some deviation is inevitable due to factors such as CPU scheduling delays and context switching. In contrast, poorly designed systems struggle to maintain throughput, and latency degrades rapidly as throughput increases. The throughput v/s latency for different systems is shown in illustration 3.

Illustration 3: Variation of latency with throughput for different systems.

3. Non-Uniform Memory Access

On a

Non-Uniform Memory Access (NUMA) machine socket (shown in illustration 4), multiple CPUs each possess local L1 and L2 caches, while sharing a common L3 cache. The caches are accessed for reads and writes before the memory.

Illustration 4: NUMA architecture.

Threads are executed on these CPUs. Typically, threads belonging to the same application are scheduled to run on a shared set of CPU cores to leverage data sharing within the caches, establishing thread affinity to a CPU. It is also possible to "pin" a thread to a specific CPU core, dedicating that core and eliminating scheduling latency for that thread. However, when multiple threads of an application execute on different CPUs that do not share L1/L2 caches, these lower-level caches can become inconsistent for the same memory location. Hardware is responsible for maintaining

cache coherence. This synchronization is often performed lazily, introducing a latency of approximately 400 ns on the access path, depending on the inter-CPU distance.

For a given memory location, read operations preferentially access the cache before resorting to RAM. Similarly, write operations are initially performed only on the cache (in write-back mode). Subsequently, these cache modifications are synchronized with RAM through two primary mechanisms:

- Memory Barriers: A specific instruction that enforces the synchronization of cache pages to RAM. Memory barriers are crucial for ordering memory operations and are therefore essential in the implementation of locking primitives.

- Cache Eviction: When a cache page is evicted to make space for new data, its contents, including any writes, are written back to RAM.

Almost all computers (servers and workstations) are NUMA machines.

4. Remote Direct Memory Access

As shown in illustration 5, in traditional networking stacks, when an application intends to retrieve data from a counterpart application operating on a remote machine, it initiates a Remote Procedure Call (RPC). This RPC execution requires a pathway through the operating system kernel and the NIC on the sending host. Subsequently, the data request traverses the NIC and the kernel of the receiving host. Following this kernel-level processing, the appropriate application thread on the receiving machine is invoked to access and read the requested data from its memory.

Illustration 5: Traditional networking stack.

In contrast, as shown in illustration 6, when employing RDMA, the NIC is granted direct access to specifically designated memory regions on the remote machine. This capability for remote direct access fundamentally eliminates the necessity for CPU intervention and, as a consequence, completely bypasses the involvement of both the operating system kernel and application threads in the data transfer process. The NIC itself possesses the inherent ability to directly read the required memory locations on the remote system and transmit the corresponding response back to the requesting application.

Illustration 6: RDMA-based networking stack.

Let's take a detailed look behind RDMA mechanics.

4.1. Memory Registration

Not all of the host's memory is available for remote access via RDMA. Only specific portions of the host's memory that is explicitly registered with the NIC can be accessed remotely, as shown in illustration 7. This registration process makes these designated memory regions accessible to the NIC's RDMA controller, which can then perform read and write operations directly on those memory locations without CPU intervention.

Illustration 7: Registered memory for RDMA.

The registered memory locations are usually "pinned", i.e., they are always resident in the memory and are never paged out.

4.2. Queue Pairs

A Queue Pair (QP) represents the fundamental communication endpoint of an RDMA connection, as shown in illustration 8. Any work request submitted to one end of a QP can be processed and received at the other end of the paired QP on the remote machine. Conceptually, a QP comprises two distinct queues: a Send Queue for initiating outgoing messages and a Receive Queue for handling incoming messages.

Illustration 8: Queue pairs.

Messages placed onto the Send Queue are essentially commands directed towards the NIC on the local host. The commands may also have pointers to the memory location where the payload can be read from.

The client's CPU encodes these commands and places them into the Send Queue of the QP. Subsequently, the host's NIC hardware takes over and executes these commands. The NIC communicates with the remote NIC to create a similar command on the Receive Queue. Finally, the payload is transferred.

4.3. RDMA Commands

NIC's RDMA controller supports certain primitive commands such as:

- Read: Retrieve data from a specified memory address on the remote host.

- Write: Transfer and store data to a designated memory address on the remote host.

- Atomics: Perform atomic memory operations like Compare-and-Swap (CAS) on a given memory address or Fetch-and-Increment a numerical value at a specific location.

Higher level commands can be built on top of these primitives.

4.4. RDMA Connection Types

RDMA supports several variations of connection semantics, offering different trade-offs in terms of reliability and connection establishment:

1. Reliable Connection (RC)

Prioritizes reliable data delivery. It leverages acknowledgements generated by the NIC hardware to ensure that commands are successfully received by the remote peer.

Furthermore, RC guarantees the ordering of message delivery. To achieve this reliability, the underlying hardware implements mechanisms for retransmissions (retries), acknowledgements, and sequence number tracking, all managed directly by the NIC.

RC in RDMA is the functional equivalent of TCP in traditional networking.

2. Unreliable Connection (UC)

In contrast to RC, Unreliable Connection does not provide guarantees for reliable delivery or ordering of messages.

3. Unreliable Datagram (UD)

A connectionless mode of communication, similar to UDP in traditional networking. No dedicated channel is established before communication. Unreliable Datagram (UD) offers lower overhead but lacks the reliability and ordering guarantees of RC.

4.5. Access Checks

To ensure memory protection and controlled access in RDMA environments, each registered memory region is associated with an access flag. This flag explicitly defines the permissible operations on that memory region, specifying whether it can be read from or written to remotely.

Similarly, each QP also carries an access flag that defines the allowed access privileges to the remote memory regions when operations are initiated through that specific QP.

Collectively, the access flags associated with both the memory regions and the Queue Pairs provide a robust mechanism for implementing various access control policies, regulating how clients can interact with the host's registered memory.

4.6. Memory Regions and NUMA Access

When a CPU writes a payload to a registered memory location and initiates an RDMA write request to a remote computer, the memory write is immediately synchronized with RAM using

memory barriers.

However, consider a scenario where a payload is received via RDMA, and the NIC writes it to memory. It's possible that a subsequent CPU read might only access the L1/L2 cache, potentially missing the newly written payload if the cache hasn't been updated.

There are two primary solutions to address this potential inconsistency:

- Designate the memory regions accessed by RDMA as uncacheable. This forces all CPU reads to fetch data directly from RAM.

- Leverage PCIe coherence mechanisms, such as CXL (Compute Express Link), which ensures that memory writes by I/O devices are synchronized with the CPU's cache lines.

5. Consensus

Consensus algorithms address the fundamental challenge of achieving agreement on a single value among distributed nodes. These algorithms must satisfy the following properties:

- Termination (Liveness): Every non-faulty node eventually decides on a value.

- Integrity (Safety): If all non-faulty nodes initially propose the same value, that value must be the decided value.

- Agreement (Safety): All non-faulty nodes agree on the same decided value.

There are no consensus protocols that can guarantee all these properties (

The FLP Impossibility). Paxos is one of the consensus algorithms that can guarantee safety, fault tolerance, and liveness to a high degree of probability.

One of the most critical applications of consensus is in generation of distributed logs for a replicated state machine (RSM). A distributed log is a sequence of ordered instructions that an RSM executes. Each log entry represents an instruction for the machines, ensuring that all nodes in the RSM execute these instructions in the same order.

For example, illustration 9 shows an RSM with a single variable X stored in it. The instructions for the state machines can be blind-writes (X := Y) or read-writes (IF v(X) = Y THEN X := Z):

Illustration 9: Distributed logs in RSM.

Note that different nodes of an RSM may be at different positions in the execution log but will finally converge to the same final state.

6. Microsecond Applications

Within the scope of this discussion, a pertinent question arises: is microsecond-level latency truly necessary? Human perception is generally limited to delays exceeding 10ms. However, the focus on microsecond-level latency is driven by several factors:

- As previously explained, latency exhibits an additive characteristic in layered microservices systems. Higher-level systems depend heavily on the performance of underlying components. Even small latencies at each stage accumulate, eventually resulting in an overall latency that becomes noticeable to humans.

- Furthermore, this paper highlights additional examples of systems where low latency is critical. These include certain key-value stores and high-frequency trading platforms (although achieving success in HFT often necessitates even finer-grained latency at the nanosecond level).

7. Mu

Mu is a microsecond consensus system that leverages RDMA as its fundamental communication mechanism to achieve high-performance agreement.

Figure 1: Copy of figure 1 from the paper.

Its architecture, as shown in figure 1, comprises two distinct planes:

- Replication Plane: Dedicated to the writing of distributed logs.

- Background Plane: Handles management tasks, including leader election.

All inter-node communication within Mu relies on RDMA. The primary communication flow is as follows:

- Clients utilize RDMA to directly write their messages into server memory. The RC RDMA connection guarantees message delivery.

- Servers periodically poll their memory to detect incoming messages and subsequently process them.

This RDMA-centric communication model applies uniformly to both the replication and background planes.

7.1. Roles

Each node in the Mu system operates in one of two roles: leader or follower.

7.2. RDMA Memory Regions

The RDMA memory space is partitioned into two dedicated memory regions: one for the replication plane and another for the background plane.

7.2.1. Permissions

- All nodes possess write permissions to each other's background plane memory region.

- However, write access to the replication plane memory region is exclusively granted to the current leader only.

7.2.2. Replication Plane Memory Region

This region contains the following data:

- Proposal Number: The next proposal number to be used, maintained only on the leader node.

- Logs: The ordered entries of the distributed log. To manage storage, logs are written in a circular buffer, with older entries being overwritten once all nodes have acknowledged reaching a specific log position.

7.2.3. Background Plane Memory Region

This region stores:

- Leader Details: Information about the current leader. Additionally, the leader maintains a heartbeat sequence for monitoring.

- Permission Details: Information regarding access rights within the system.

7.3. Replication Plane

Let's delve into the workings of Mu's replication plane, with the core logic detailed in Listing 2.

At its heart, the authors have implemented the Paxos algorithm utilizing RDMA. For those familiar with

Paxos, the implementation follows a straightforward pattern:

- A proposer (termed "leader" in the paper) aims to propose a log entry for a specific log position (slot) it perceives as empty. Acceptors (referred to as "followers") respond with either an acknowledgment (ACK) or any value currently occupying that slot.

- Subsequently, the proposer proposes a value for the slot. If all acceptors indicated an empty slot, the proposer's original value is proposed. Otherwise, the proposer must propose the value returned by the acceptors.

Important: If the proposer's initial value isn't accepted for a given slot, it retries with the next available slot position.

A key characteristic of Mu is that the acceptors' (i.e., followers') CPUs are not directly involved in the proposal and acceptance phases. Both these steps are executed entirely by the proposer (leader). Follower CPUs periodically fetch the committed logs and apply them to their state machines.

We will discuss leader election in the next section. For now, let's assume a leader has obtained write permission to the replication plane memory region from a majority of followers, termed confirmed followers. It's possible to have multiple nodes believing they are the leader, for instance, if one leader believes it has a majority while some followers have granted write permission to a new leader without the original leader's awareness.

7.3.1. Data Structures

Each node maintains a Log struct with the following fields:

- minProposal: The minimum Paxos proposal number seen by this node. A proposer can only issue new proposals with a number exceeding all known minProposal values.

- FUO (First Unoccupied): The index of the first log slot that does not yet contain a value. All log entries up to (but not including) FUO are considered stable and can be directly applied.

- slots[]: An array containing log entries in the format <proposal number, value>. The proposal number indicates the proposal under which the value (the actual log entry) was accepted. The proposal number may change but the value, once committed, can never change throughout the working of the algorithm.

7.3.2. Core Algorithm

Let's examine Listing 2, the core algorithm:

- Lines 13-15: As previously mentioned, a proposer continuously executes the two phases of Paxos - PREPARE and ACCEPT - until its proposed value is accepted into a log slot. The next log entry is targeted for the FUO position.

- Lines 18-24: The leader determines the highest proposal number it must use for the current FUO slot (based on its local FUO and knowledge of other nodes' minProposal). It then writes this proposal number to all confirmed followers. Simultaneously, it reads any existing value at that FUO slot on any of the followers. This mirrors the Paxos PREPARE phase, where acceptors acknowledge or return the current value of the target slot.

- Lines 25-28: These are critical. Following the PREPARE phase, if any follower returned a value for the FUO slot, the leader must use that same value in the subsequent ACCEPT phase. The leader then increments its FUO and retries the process for the next slot.

- Lines 31-33: If the FUO slot is found to be empty on all confirmed followers, the leader writes its proposed log entry to that position. If a race condition occurs (e.g., another leader has already written to that slot), the write operation will abort. The proposer then needs to retry for the next available slot.

- Lines 34-35: Importantly, the proposer only relinquishes its attempt when it has successfully written its own proposed value to some log slot.

7.3.3. Impact of Multiple Leaders on Consensus

Multiple leaders do not compromise the safety of the consensus in Mu. Paxos, by its design, prioritizes safety over guaranteed termination (as dictated by the

FLP impossibility result).

Consider a scenario with two nodes believing they are the leader. This can arise when a majority of followers grant write permission to a new leader while the previous leader still considers itself the leader. In this situation, the original leader will lose its write permission on a majority of nodes, which will share at least one node with the new majority. Consequently, the original leader will be unable to perform any writes – neither proposal numbers nor log entries. It can still read information and update its local state.

Indeed, the write permission to the replication plane memory region acts as a crucial safeguard, even without direct CPU involvement in the data transfer. This write permission is managed through mechanisms that do involve the host's CPU.

7.3.4. Catch-up Mechanism

New leaders and followers that have fallen behind in the log can catch up by reading the FUO value from all other nodes and then blindly applying all log entries up to the highest observed FUO to their local logs (as shown in Listings 3 and 4). Subsequently, they set their local FUO to this highest known value. In fact, any aspiring leader must perform this catch-up process before assuming the leadership role.

This mechanism works because if the FUO is incremented on even a single replica, the corresponding committed command will eventually be reflected on all other replicas. This is guaranteed by the monotonic nature of proposal numbers and the fundamental properties of Paxos. All log slots up to the FUO on every replica will always contain the exact same committed command.

7.3.5. Omitting the Prepare Phase

An optimization employed in Mu is the potential omission of the PREPARE phase immediately after a leader is elected. At this point, it's known that the leader has been granted write permission by a confirmed majority of followers. Therefore, the leader can directly attempt to write its value to the next available slot. The minProposal value is also already established. If the leader subsequently loses its leadership, this will be detected when its write attempt during the ACCEPT phase fails on the majority of nodes.

7.3.6. Follower Commit Read

Followers continuously poll the log memory region to read new entries. However, they need a mechanism to determine how far they can safely read. Since the leader doesn't directly update the FUO on follower nodes, followers rely on a canary byte embedded within each log entry. This canary byte serves two critical purposes:

- It acts as a marker, allowing followers to identify the beginning of a complete log entry.

- It ensures that a log entry is read only when the entire entry has been written. This is important because RDMA writes across the entire log entry memory region might not be a single atomic operation. Therefore, the leader writes the log entry in several steps and then atomically sets the canary byte to signal completion.

7.4. Background Plane

7.4.1. Leader Election

Let's ask a fundamental question: Does the replication algorithm described earlier guarantee safety, liveness, and fault tolerance? No. While it ensures safety and fault tolerance (inherent properties of Paxos), it does not guarantee liveness. This limitation is the same inherent challenge present in the Paxos protocol itself.

Following Leslie Lamport's approach with Paxos, Mu employs a leader to facilitate faster progress. Interestingly, even leader election in Mu is achieved through Paxos, by appending an entry to the distributed log. Consequently, leader election itself is also susceptible to the same liveness issues.

Despite this, the presence of a leader significantly optimizes the algorithm's speed. To enhance the probability of achieving liveness, the authors introduce randomness in the form of timeouts.

7.4.1.1. Timeout Implementation

Timeouts are implemented using a heartbeat mechanism. Each node maintains a local counter that increments periodically. This counter's value is written to a specific memory address within the background plane. Other nodes monitor these counters via RDMA reads. If a counter continuously increases, the corresponding node is considered alive.

The authors define two threshold values - failure and recovery - to determine if a node is considered dead or has recovered.

7.4.1.2. Impact on Correctness

Does this heartbeat mechanism affect the correctness of the consensus? No. Similar to standard Paxos, Mu remains inherently safe regardless of the number of active leaders. Timeouts are solely employed to introduce an element of randomness, aiming to ensure liveness with a high probability.

7.4.1.3. Miscellaneous Optimizations

The authors have implemented several optimizations to minimize latency. One notable effort involves the leader election thread relinquishing its leadership bid if it detects that the replication thread is stalled and not making progress.

7.4.2. Permission Management

Mu employs various strategies for managing permissions. Re-registering memory regions becomes increasingly expensive (e.g., 100 ms for a 4 GB region registration) as the memory region size grows, making it undesirable for maintaining low tail latency.

Instead, Mu primarily utilizes:

- Changing the access flags on the QP (fast path): This provides a quick way to modify access permissions.

- Re-initiating the QP (slow path): This operation resets the access to read-only and is used as a fallback mechanism when the fast path is insufficient.

The paper presents a compelling evaluation section that warrants closer examination.

8.1. Setup

Mu's performance is assessed in two primary deployment modes: standalone and attached.

In the standalone configuration, a single thread continuously calls the propose() function within a tight loop, isolating the core consensus mechanism. Conversely, the attached mode integrates Mu as a component within a complete end-user application.

The evaluation reveals that the standalone mode exhibits slightly superior performance compared to the attached mode. This advantage stems from the standalone mode's ability for the CPU to directly submit work items to the NIC with minimal overhead. In contrast, the attached mode introduces an intermediary layer, potentially hindering this direct interaction.

Within the attached mode, two sub-configurations are explored: direct and handover. In the direct mode, the application's primary thread is responsible for executing the replication logic. This allows for relatively direct communication with the NIC, bringing its performance closer to the standalone mode, though not identically. The slight performance difference arises because multi-threaded applications can introduce resource contention and the application thread might migrate across CPU cores, leading to cache misses.

The handover mode, on the other hand, offloads the actual replication tasks to a dedicated background thread, separate from the application's threads (potentially a shared replication thread for multiple application threads). This background thread runs on a different CPU than the applications' thread. Consequently, when this background thread needs to access common memory, cache coherence protocols are invoked to maintain data consistency, introducing a latency penalty of around 400 ns.

8.1.1. Systems Under Comparison

The evaluation benchmarks Mu against several other RDMA-based replication protocols, including

APUS,

Hermes, and

DARE. Additionally, it compares Mu's impact when integrated with applications requiring microsecond-level consensus, such as LiQ, Memcached, Redis, and HERD.

8.2. Results

8.2.1. Replication Latency Comparison

- Figure 3 demonstrates that the standalone mode consistently achieves the lowest replication latency across various payload sizes, outperforming all other evaluated modes within Mu.

- When compared to other replication systems (as shown in Figure 4), Mu integrated with Redis or Memcached exhibits superior performance, achieving p99 latencies of less than 2 μs.

8.2.2. End-to-End Latency Comparison

- Figure 5a illustrates that when Mu is integrated with LiQ, the replicated performance remains remarkably close to the unreplicated performance, introducing a minimal overhead of approximately 2 μs.

- Similarly, integration with HERD shows that the replicated performance closely mirrors the unreplicated performance. Notably, Mu-replicated demonstrates better performance and more stable tail latency compared to DARE-replicated.

- For key-value stores like Memcached and Redis, Mu integration leads to better overall performance, particularly in terms of tail latency.

8.2.3. Failover Time

The measured failover time is impressively low, at less than 1 ms. The majority of this time is attributed to failure detection. This suggests an aggressive polling mechanism for detecting node failures.

8.2.4. Throughput

To maximize throughput, Mu employs request batching. With a batch size of 128 requests, 8 concurrent outstanding requests (a queue depth of 8), and a 64-byte payload per request, the median observed latency per operation is 17 μs.

This translates to a calculated throughput of approximately 48 Gbps using the formula: (128 batch size * 8 outstanding requests * 64 bytes/request * 8 bits/byte * 2 replicas * 106 μs/s) / 17 μs.

As is typical with such systems, the latency remains relatively stable up to a certain throughput threshold. For Mu, this inflection point is around 45 operations per microsecond. The authors attribute this limitation to memory operations becoming the bottleneck for the replication thread beyond this point. Increasing the number of queued (outstanding) requests can potentially improve throughput but at the cost of increased latency due to longer queueing delays.

9. Paper Remarks

The paper is well-written, effectively establishing the necessary background before detailing the design. The evaluation is thorough, and the problem addressed carries broad applicability across numerous systems. For readers with a solid understanding of RDMA, the methodology is straightforward to follow.

← Previous InsightNext Insight →

Comments

Post a Comment