Moving beyond virtualization, we will now focus our attention on file systems - an essential subcomponent of the operating system that governs the logical organization and management of data on storage media. Over the next five papers, we will examine various types of file systems and explore the underlying mechanics of modern storage hardware.

This paper was authored by M. Rosenblum (co-founder of VMware) and J. Ousterhout in 1992. It explores Log-Structured File Systems (LFS). While LFS was previously considered obsolete, the rise of Solid State Drives (SSDs) has rekindled interest in its core principles, particularly the concept of immutability. Furthermore, modern file systems, such as RAID 5, incorporate principles from LFS. HP's commercial AutoRAID product, for example, is based on LFS.

In this insight, we will begin with the fundamentals - defining what a file is and how a file system integrates into the broader operating system. We will then discuss some basic file systems before conducting a deep dive into LFS.

1. File

A file is an ordered collection of bytes. Files can reside in various locations, such as on disk, in memory, or across a network. This article focuses on disk-based files.

The Von Neumann architecture can work with just processors and memory. However, the need for files arose from the desire for persistence. Files provide a mechanism to save the results of a program so they can be retrieved and used later, essentially preserving data across sessions.

Essentially File is also an interface exposing crucial APIs:

class File {

static void Open(char* path, char* mode);

void Read(void* buffer, int nbytes);

void PRead(int pos, void* buffer, int nbytes);

void Write(void* buffer, int nbytes);

void PWrite(int pos, void* buffer, int nbytes);

void Flush();

void Seek(int pos);

int Tell();

void Close();

};

When a program interacts with a file, it uses a File object, which maintains an internal cursor to track the current position within the file. The File interface typically provides the following methods:

- Open(path, mode): Opens the file at the given path in the specified mode. Common modes include "r" (read), "w" (write), and "a" (append), with variants like "w+" (create if it doesn't exist).

- Read(buffer, nbytes): Reads nbytes from the current position into buffer.

- PRead(pos, buffer, nbytes): Reads nbytes from the specified position into buffer.

- Write(buffer, nbytes): Writes nbytes from buffer at the current position.

- PWrite(pos, buffer, nbytes): Writes nbytes from buffer at the specified pos.

- Flush(): Forces all pending writes to disk, committing them.

- Seek(pos): Moves the cursor to the specified pos. Subsequent Read() and Write() operations will start from this new position.

- Tell(): Returns the current cursor position.

- Close(): Closes the file, releasing associated resources (including the File object itself).

The File interface is one of the most misused interfaces in Linux with several unconventional implementations. However, as long as the interface's contract is adhered to, such manipulations are sometimes acceptable for achieving performance gains.

2. File Inode

Files stored on disks are divided into equally-sized blocks. The inode data structure stores the locations of these blocks for each file.

Inode data structures vary across file system implementations. The most common approach is a table format, where entries are typically ordered by the file's block numbers. To prevent inode data structure overflow when files use an excessive number of blocks, inodes are often implemented as linked structures, as shown in illustration 1.

Apart from the location of blocks, inodes also store file attributes/metadata such as owner, group, mtime (last modified time), etc.

Illustration 1: Linked inode structure.

Note: Inodes themselves are also stored on disk.

Each inode includes a generation number, a unique identifier for each version of a file. If a file is deleted and recreated, the new file receives a distinct generation number, differentiating it from the previous instance.

3. Directories

Files are organized into directories. In BSD-based Linux systems, a directory is itself a special type of file. Crucially, a directory file contains entries <filename, inode> that represent the files and subdirectories within it.

3.1. Multi-Level Hash Tables

3.2. B-trees

4. Reading a File

The root directory has a known, fixed inode location, let's say l. To access data within the file /dir/file, the following steps are taken, as shown in illustration 4:

- The directory data of root directory is read to find the inode location of the dir directory.

- The dir directory's inode is retrieved to determine the disk block locations of the directory's contents.

- The directory entries are searched to find the inode location of file.

- The file inode is retrieved to determine the disk block locations of the file's data.

- The data blocks for file are fetched.

5. Writing to a File

When a file is written, several updates must be persisted to disk:

- The file's data is written to allocated file blocks (possibly new ones).

- The file's inode is updated to reflect any new data block locations and other file attributes (e.g., size, mtime, etc).

- If the file is new:

- A directory entry for the new file is created within its parent directory block.

- The parent directory's inode is updated. Creating a new file also updates the parent directory's modification time (mtime).

6. Storage Media

7. File System

A file system defines how files are organized and managed on storage media, specifically how file blocks are arranged on the disk.

Each file system has a superblock at a fixed location on disk that contains static configuration information about the file system including the type. A basic file system would store the root inode directly within the superblock. From there, the file system could navigate the entire directory tree to locate files.

Storage devices commonly support partitioning, a process that creates multiple logical volumes on a single physical disk. Each partition can host a distinct file system and utilize a defined subset of the disk's capacity. Partition details are stored within the superblock.

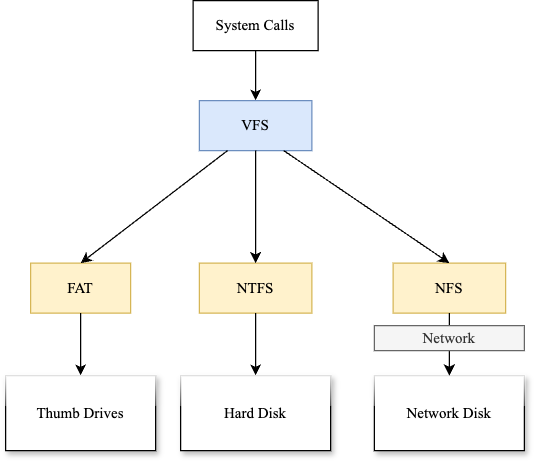

Several types of file systems exist, including:

- FAT (File Allocation Table)

- NTFS (New Technology File System) - a journaling file system

- ext4 (fourth extended file system) - another journaling file system

8. FAT File System

The File Allocation Table (FAT) is a relatively simple file system.

8.1. Disk Organization

A FAT-formatted disk is organized into three primary regions, as shown in illustration 5:

- FAT Region: This region stores several File Allocation Tables.

- Root Directory Region: This region holds the entries for the root directory.

- Data Region: This region contains the data blocks (clusters) for files and directories.

Illustration 5: Disk organization in FAT.

8.2. File Allocation Table

Files and directories are divided into fixed-size units called clusters. Clusters belonging to a file are linked together in a chain. The FAT itself is a table that contains entries for each cluster. Each entry indicates one of the following:

- The cluster number of the next cluster in the chain.

- A special End-of-Cluster (EOC) marker, indicating the end of the chain.

- A marker indicating a bad cluster.

- A zero, signifying an unused cluster.

The FAT functions similarly to an inode, residing in a fixed, dedicated region on the disk.

8.3. File Writes

Note that clusters for a file or directory are not necessarily contiguous. The clusters for a single file can be scattered across the disk. As a result, write operations in the FAT file system are generally random. This is because file data blocks, directory blocks, and FAT entries can be located at disparate positions on the disk.

8.4. FAT Variants

FAT has evolved through several variants:

- FAT12: Uses 12-bit cluster entries, supporting volumes of limited size (e.g., floppy disks).

- FAT16: Uses 16-bit cluster entries, allowing for larger volumes.

- FAT32: Uses 32-bit cluster entries, supporting significantly larger volumes and files.

8.5. Usage

The FAT file system, particularly FAT32, is widely used on:

- Removable storage devices (USB flash drives, SD cards) due to its broad compatibility.

- Embedded systems and devices with limited resources.

9. Journaling File System

Important note: Journaling is not a type of file system but a feature of a file system.

A journaling file system employs a journal (or log) in addition to the main file system. This journal records pending updates, which greatly aids in recovery after a system crash or power outage.

Common journaling file systems include ext4, ext3, JFS, BRTFS, NTFS, and HFS+.

Recommended Read: Paper Insights - Rethink the Sync to learn more about journaling file systems.

10. Role of Operating Systems

10.1. Everything is a File

The concept that everything is a file holds a significant place. This principle extends to terminals and hardware devices as well.

Terminals and hardware devices are represented as special files known as device files. These device files reside in the /dev directory. This means that the operating system interacts with terminals using the same file I/O operations (like read and write) that it uses for regular files.

Terminals are specifically classified as character devices because they handle data character by character. This is in contrast to block devices (like hard drives) that handle data in blocks.

When one interacts with a terminal, the following three files used:

- Standard input (stdin): Where the system receives input from the user.

- Standard output (stdout): Where the system displays output to the user.

- Standard error (stderr): Where the system displays error messages.

10.2. File Descriptor

When a process opens a file, it receives a file handle. This file handle contains a file descriptor.

The file descriptor is an integer that serves as an index into the process's file descriptor table. Each process maintains its own independent file descriptor table.

Unix-like systems define three standard file descriptors for each process:

- 0: stdin

- 1: stdout

- 2: stderr

Other file descriptors within the table may reference file inodes located on disk.

10.3. File & File System Commands

Beyond standard file operations like Open, Read, Write, Flush, and Close, and file system operations such as MkDir and Create, operating systems provide utilities for maintenance and data integrity.

fsck

fsck is a utility used in Unix-like operating systems (e.g., Linux) to examine and repair file system inconsistencies. It scans for errors, including corrupted data structures, bad blocks, and incorrect metadata. Its purpose is to ensure file system integrity and stability.fsync

Simply flushing a file (e.g., using a Flush() operation) may not guarantee immediate persistence to disk. Operating systems often optimize performance by initially writing data to in-memory buffers. The fsync command tells the operating system to write all these in-memory buffers to the physical storage device.sync

sync is similar to fsync but operates across all files. Additionally, the operating system will also instruct the disk buffers (described next) to be flushed. This action ensures that all pending write operations are truly committed to disk.11. Log-Structured File System

LFS treats the entire file system as a journal. LFS was developed in the 1990s, a time when:

- Disk technology was improving in cost and capacity, but not proportionally in transfer bandwidth and access time (shown in illustration 7). Disk seek time, in particular, was a major performance bottleneck. Hard drives rely on a physical read/write head, and the disk must rotate to position the head correctly. Numerous small writes can result in excessive disk seeks, significantly impacting performance. Because disks rotate in one direction, a missed seek requires a full rotation to return to the correct position.

- To mitigate the cost of disk seeks, disks often included large caches to buffer file writes, enabling more efficient block writes. However, buffered data is vulnerable to loss in the event of a system crash.

11.1. A Hint on External Consistency

Disk buffers are just one example of buffering at various levels:

- Program Buffer: The program itself uses a buffer in its memory. Write() operations initially store data in this buffer. Flush() typically transfers data from the program buffer to the operating system buffer.

- Operating System Buffer: The operating system also maintains a buffer to further optimize disk writes. Files often provide a Fsync() API to flush data from the operating system buffer to the disk.

- Disk Buffer: As mentioned earlier, the disk itself has a buffer. The sync command triggers a disk-level synchronization command to ensure all buffered writes are committed to the physical disk.

External consistency (or external sync) is making sure that the written bytes are on the disk before an externally visible event occurs.

Think about Flush(). One might expect data to hit the disk right away, but the operating system is often playing a smarter game for optimization. It might hold off till there is an externally visible event. Say, then, an email is sent. The operating system will then flush that file data before sending your message.

Recommended Read: Paper Insights - Rethink the Sync to learn more about how external consistency is ensured by operating systems.

11.2. Random Writes to Sequential Writes

Traditional file writing methods often require multiple disk seeks. For example, the paper asserts that a typical file system (FFS) uses five seeks to write a single file. This is because writes are often random, necessitating multiple physical movements of the disk head.

A key optimization strategy is to convert these random writes into sequential ones. The core idea is to buffer a series of write operations and then commit them to disk in a single, contiguous write. This sequential write requires only one disk seek, a significant improvement. This is precisely the approach taken by LFS.

11.3. Segmentation

The disk is organized into segments, and each segment is written sequentially from beginning to end. Segment size is around 512KB to 1 MB. A segment effectively acts as a write-ahead log, supporting sequential writes.

A segment is composed of blocks, which are the smallest units of data read from and written to the disk. Block sizes typically range from 512 B to 4 KB. The file blocks, directory blocks, inodes, and other new data structures are logged as blocks on the segments.

We will explore how segments are created in more detail shortly.

11.4. Writing to a File - A Straw Man

When a file is written:

- The file's data is written to allocated file blocks (possibly new ones), which are then buffered.

- The file's inode is updated to reflect the updated block locations within the log. This updated inode is also buffered.

- If the file is new:

- The directory block is updated to include an entry for the new file and buffered.

- The directory's inode is updated to reflect the new directory block location and buffered.

All these buffered updates are then written sequentially in one shot. This write operation translates to a small number of sequential disk writes, depending on how many segments are involved.

However, this initial approach presents a key challenge. Because file and directory inodes are now written to new locations within the log, it becomes necessary to efficiently locate them when needed. How to do that?

11.5. Inode Map

11.6. Reading a File

- The current directory's inode number is looked up in the inode map to get its on-disk address.

- The directory's inode is retrieved to find the location of its directory blocks.

- The directory blocks are read to find the inode number of the target file or subdirectory.

- Steps 1-3 needs to be repeated until the desired file's inode number is found.

- The file's inode number is looked up in the inode map to get its on-disk address.

- The file's inode is retrieved to determine the location of its data blocks.

- The file's data blocks are read.

11.7. Writing to a File

- The file's data is written to allocated file blocks (possibly new ones), and then buffered.

- The file's inode is updated to reflect the updated block locations within the log. This updated inode is also buffered.

- If the file is new:

- The directory block is updated to include an entry for the new file and buffered.

- The directory's inode is updated to reflect the new directory block location and buffered.

- The inode map is updated to reflect the new inode locations and buffer it.

- All buffered updates are then written sequentially to segments.

Illustration 8: Writing to a file in LFS

11.8. Immutability at Play

11.9. A Note on Locality

File system writes can exhibit two types of locality:

-

Logical Locality: This refers to the arrangement of file blocks contiguously on the disk. This allows the entire file content to be read sequentially in a single operation, regardless of the order in which the blocks were originally written. For example, a client might write to block 1 of a file, then write data to other files, and later write to block 2 of the same file. With logical locality, these blocks, though written out of order, are stored adjacent to each other on the disk, enabling sequential reads.

-

Temporal Locality: This refers to the arrangement of file blocks according to the order in which they were written. In the example above, blocks of the same file would be scattered across the disk, reflecting the order of writes. Reading the entire file would then require multiple, non-sequential (random) disk accesses.

LFS prioritizes temporal locality of writes, meaning blocks are written in the order they are received.

12. Segment Cleaning

Segments are not necessarily stored contiguously on disk; they can be threaded together, as shown in illustration 9.

As file blocks, updated inodes, and updated inode map blocks are written to new locations, the older versions become candidates for garbage collection.

Segment cleaning involves identifying a segment containing obsolete data and copying all the live (still in use) blocks within that segment to a new, active segment. This process frees up the original segment, making it available for new writes. Because writes to a segment must be sequential from beginning to end, clean segments are essential for continued operation.

The cleaning process selects M segments for cleaning and results in N clear segments, M > N.

12.1. Write Cost

Segment cleaning introduces overhead to the write process. The total write cost isn't just the expense of writing new log data; it also includes the cost of relocating live blocks during segment cleaning.

The authors provide a conservative estimate of this cost based on segment utilization (u) of live blocks. Consider N segments with an average utilization u:

- Reading the N segments costs N.

- Writing the live data out to new segments costs N*u.

- The cleaning process yields N*(1 - u) free space for new data.

The effective write cost is defined as the ratio of total disk reads and writes to the writes of new data only. This represents the write amplification factor.

Write cost = (N + N*u + N*(1-u)) / (N*(1-u)) = 2 / (1-u)

As segment utilization increases, so does the write cost. This formulation omits rotational latency and disk seek time, as these are considered negligible for the sequential reads and writes characteristic of log-structured file systems.

The formula shows that the write cost increases as utilization increases. For example, as shown in Figure 3, the write cost reaches 14 when segment utilization reaches 80%.

12.1.1. Optimizing Write Cost

Files are categorized into two groups based on write frequency:

- Hot files: The 10% of files that account for 90% of write operations.

- Cold files: The remaining 90% of files, which account for only 10% of writes.

During segment cleaning, blocks are sorted by age and re-written. Stable blocks (older, less frequently modified) are written to new segments first, followed by newer blocks. This sorting leads to a rough division of segments into two categories:

- Hot segments: These segments contain recently written data and are more likely to have blocks invalidated frequently.

- Cold segments: These segments contain stable, valid data blocks.

Hot segments are more likely to contain hot files, while cold segments are more likely to contain cold files.

Now, we will see how segments are selected for cleaning.

Approach 1: Greedy

One approach to segment cleaning is to use a fixed cleaning threshold. A segment is cleaned if its utilization falls below this predefined threshold.

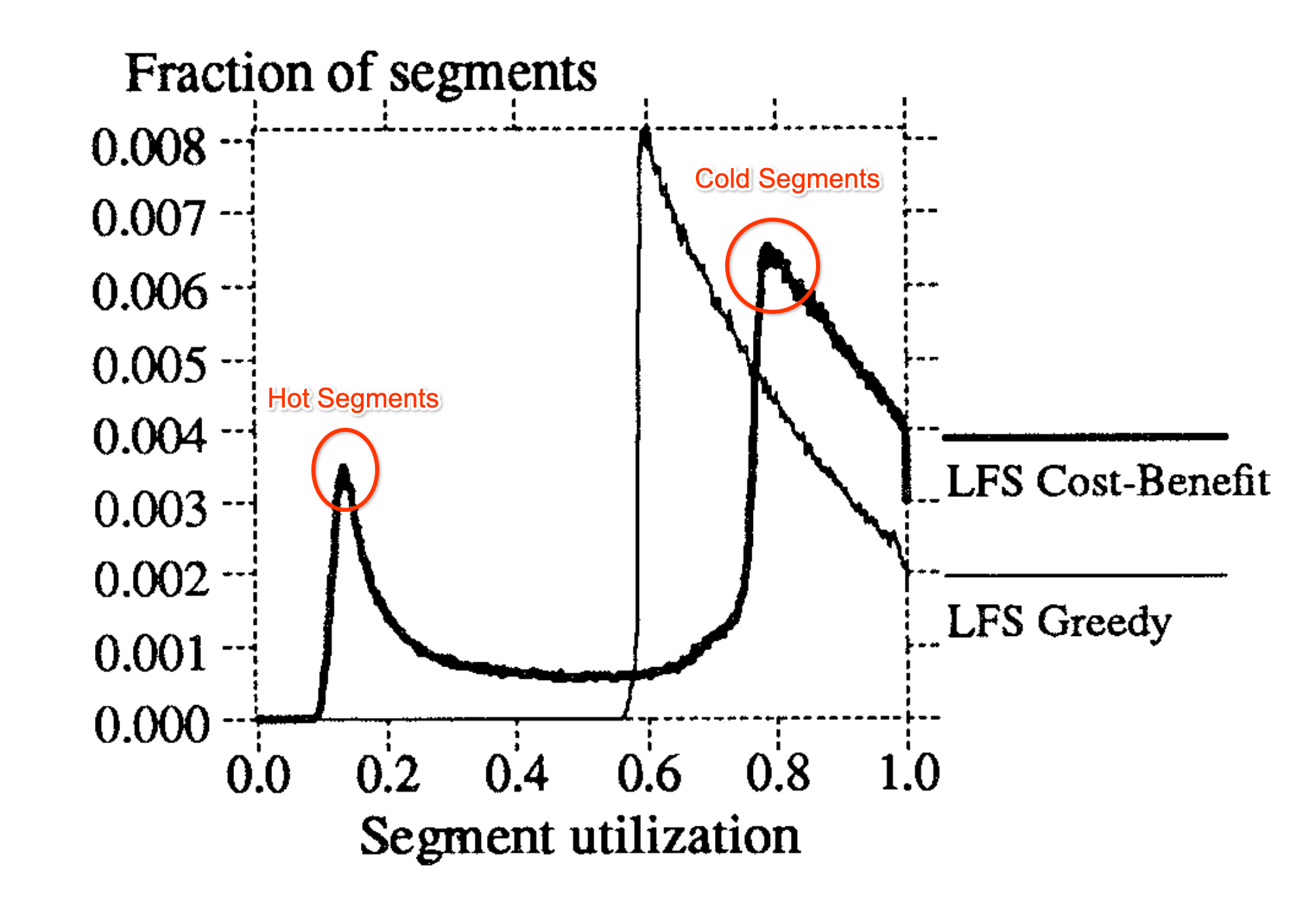

Figure 5: Copy of figure 5 from the paper.

Figure 5 illustrates this: below the cleaning threshold, there are no segments because those segments would have already been cleaned and freed. The curve slopes downward after the cleaning threshold, indicating that most hot segments tend to have lower utilization (just above the cleaning threshold).

The primary disadvantage of a fixed cleaning threshold is that the utilization of hot segments drops rapidly as data becomes obsolete (note that segment utilization only accounts for live blocks). These segments quickly reach the cleaning threshold and are cleaned frequently. This high cleaning frequency is inefficient.

Conversely, cold segments experience less rapid utilization decline. Once cleaned, they tend to remain stable and require less frequent cleaning.

When a cold segment is cleaned, its live blocks are likely moved to another cold segment, which itself will then be less likely to require immediate cleaning. However, when a hot segment is cleaned, its blocks are moved to another hot segment, which will likely also need cleaning soon.

Approach 2: Cost Benefit

We can model the benefit of cleaning a segment as a function of two factors:

- The amount of free space in the segment (1 - u). More free space translates to a greater benefit from cleaning.

- The "age" of the segment, defined as the time elapsed since the most recent modification of any block within that segment. Younger segments are less beneficial to clean.

The cost of cleaning a segment is 1 + u (1 represents the cost of reading the segment, and u represents the cost of rewriting the live blocks).

Therefore, the cost-benefit of cleaning a segment can be expressed as:

Cost-benefit = (1 - u) * age / (1 + u)

The cost-benefit approach results in a bimodal distribution of segment utilization (shown in figure 6): hot segments tend to have lower utilization, while cold segments have higher utilization.

The fixed cleaning threshold approach focused solely on maximizing free space (cleaning as much as possible). The cost-benefit approach, however, balances the cost of cleaning with the benefit of the resulting free space.

If cold segments have limited free space, the cost-benefit policy favors cleaning hot segments, even though they have a higher cleaning cost, to maximize the amount of free space gained. Conversely, if cold segments have sufficient free space, they are prioritized for cleaning due to their lower cleaning cost.

This policy achieves a good balance between cleaning cost and benefit. The write cost is typically between 2 and 4 when the overall utilization is around 80%.

12.2. Segment Usage Table

13. Checkpointing

The checkpoint region stores the locations of the inode map and the segment usage table. It also records the last checkpoint written to the log, which is crucial for recovery.

Unlike data blocks, inodes, and the inode map, the checkpoint region resides at a fixed location on the disk.

A checkpoint represents a point in the log where all file system structures are consistent and complete. Checkpoints are written to two distinct locations on disk for redundancy. This replication is essential because checkpoint loss would lead to file system corruption.

Replication also enables atomic checkpoint updates. To write a checkpoint:

- All checkpoint blocks are written.

- A timestamp is written atomically to a specific block.

Until the timestamp is successfully written, any partial checkpoint data is considered invalid. Upon system restart, the operating system selects the checkpoint with the highest (most recent) timestamp, ensuring that only complete and consistent checkpoints are used. This atomic timestamp update guarantees checkpoint integrity.

14. Roll Forward Recovery

Checkpoints are written every 30 seconds. Consequently, a crash might occur before the latest checkpoint is written, leaving up to 30 seconds of log data untracked. It's sometimes possible to recover some of this lost information through a process called roll-forward recovery.

14.1. Segment Summary

The segment summary block describes the contents of its associated segment, including file numbers (inode numbers) and offsets for each block within the segment. Critically, the segment summary block itself is written to the log, not stored at a fixed disk location.

The segment summary is essential for identifying all live (valid) blocks within a segment.

14.2. Directory Operation Log

A directory operation log, a special record within the log, captures each directory modification. This record contains an operation code, the location of the directory entry, the entry's content (name and inode number), and the updated reference count for the named inode. Like other log entries, the directory operation log is stored within the log itself.

Crucially, the directory operation log is written before the corresponding file inode or directory block. This ordering facilitates reference fixing during recovery.

14.3. Recovery Steps

The recovery process for an LFS involves the following steps:

-

The recovery program scans all segment summary blocks (by reading segments). When a summary block reveals a new inode, that inode's information is added to the inode map. The segment usage table is also updated based on the information in the segment summary blocks, reflecting the current state of each segment. If an inode for a file was never written, its associated data blocks are ignored during recovery.

-

Each inode maintains a reference count, indicating the number of directories referencing it. A key challenge is ensuring consistency between directory entries (filename - inode number mappings) and the newly recovered inodes. If a crash occurred after writing an inode but before updating its corresponding directory entry, the directory operation log comes into play. These logs are used to correct the contents of directory blocks, ensuring they accurately reflect the current state of files.

The recovery program then appends the modified directory blocks, their inodes, the file inodes, the updated inode map, and the updated segment usage table to the log. Finally, a new checkpoint region is written to include these changes.

Similar recovery processes exist in other file systems. This explains, in part, why the operating system boot process can take a noticeable amount of time.

15. Summary of Disk Organization in LFS

Apart from the superblock, checkpoint regions are located at the beginning and end of the disk, as shown in illustration 10.

The remaining disk space is divided into equal-sized segments, linked together in a list. Each segment contains blocks that can be classified as live (in use), obsolete (no longer needed), or free.

Segment cleaning is the process of identifying and consolidating live blocks to reclaim space. This concept is analogous to garbage collection in programming: Valid file/directory blocks are those reachable from the root, just as valid objects in a program are those reachable from root objects, stack globals, or the stack itself.

16. Summary of New Data Structures in LFS

Log-structured file systems introduce several key data structures:

- Inode map: Maps inode numbers to the inode's on-disk locations.

- Segment summary: Describes the contents of a segment, including file numbers and block offsets.

- Segment usage table: Tracks the amount of live data within each segment and the last write time for data in those segments.

- Checkpoint region*: Stores the locations of the inode map and segment usage table.

- Directory change log: Records directory operations to maintain consistency of inode reference counts.

17. Evaluation

The evaluation of LFS performance reveals the following:

-

Small File Performance: LFS demonstrates a significant performance advantage for small file operations, exhibiting up to a 10x speedup for new file writes. Reads are also accelerated due to the sequential arrangement of file blocks for multiple small files.

-

Large File Performance:

- Sequential writes to large files are faster in LFS. Sequential file writes in traditional file systems can lead to random disk writes due to inode and directory updates. Instead, LFS converts all writes to sequential operations.

- Random writes to large files are, as expected, considerably faster with LFS.

- Sequential and random reads of large files experience a performance degradation because LFS does not preserve logical locality after writes.

-

Cleaning Overhead: The observed write cost is significantly lower than predicted by simulations. Measured write costs ranged from 1.2 to 1.6, compared to the simulated range of 2.5 to 3. The bimodal distribution of segment utilization is highly skewed, with peaks at the extreme ends. This indicates the presence of many extremely cold segments that remain unmodified.

-

Recovery Time: Recovering 50,000 1 KB files required a maximum of 132 seconds. The recovery time increases by approximately one hour for every 70-second increase in the checkpoint interval.

18. Paper Remarks

This paper capitalized on the prevailing trend of the time, addressing the bottleneck of slow disk access speeds. This is a well-written paper with clever ideas, fundamentally based on the concept of immutability. It is highly recommended as an excellent introduction to file system concepts.

Comments

Post a Comment