We will now take a brief departure from database exploration to examine the world of decentralized systems. While "distributed" describes how components are physically spread out for efficiency, "decentralized" describes how power and control are shared among multiple independent parties. Note that while all decentralized systems are distributed, not all distributed systems are decentralized.

A primary example of this architecture is the InterPlanetary File System (IPFS). The original white paper, IPFS - Content Addressed, Versioned, P2P File System, described a fully decentralized system designed for the next generation of the internet, often referred to as Web3. However, achieving full decentralization has faced challenges due to significant performance-related issues. As a result, IPFS is currently evolving toward a hybrid model that incorporates elements of centralization to improve efficiency.

The specific paper discussed here was presented at the Networked Systems Design and Implementation (NSDI) '24 conference, a prestigious venue for distributed systems research.

In this insight, we will begin with the necessary background on the XOR trie, a data structure essential to the components described in the paper. Next, we will explore Kademlia Hash in detail, which is used extensively in IPFS. Following that, we will explore the decentralized web and the inner workings of IPFS in its most decentralized form. Finally, we will examine how the transition toward a more centralized, hybrid model is detailed within the paper.

Recommended Read: Paper Insights - Dynamo: Amazon's Highly Available Key-value Store where consistent hashing and its techniques were discussed.

1. XOR Trie

Illustration 1: Binary trie.

- All binary strings inserted into the trie must be of the same length.

- Both child branches of a node are either both empty (nil) or both non-empty.

- If both child branches are non-empty, the node itself has no key associated with it.

- If both child branches are leaf nodes, they both must have keys associated with them.

2. Kademlia Hash

Kademlia, developed by P. Maymounkov and D. Mazières in 2002, has gained prominence through its use in trackerless BitTorrent and currently serves as the Distributed Hash Table (DHT) for IPFS.

Kademlia arranges the virtual nodes into leaves of a full binary tree, as shown in illustration 4. Note that the same physical machine may be placed at multiple leaves of the tree in the form of virtual nodes.

2.1. XOR Properties

- The XOR distance between a node and itself is zero.

- Symmetry: The XOR distance between any two nodes (A and B) is the same regardless of the order (i.e., distance(A, B) = distance(B, A)).

- Triangle Inequality: For any three nodes (A, B, and C), the XOR distance between A and B is less than or equal to the sum of the XOR distances between A and C, and C and B.

2.2. Routing Algorithm

Each node is assigned a unique identifier (e.g., a hash value).

Each node maintains a routing table with entries based on its identifier's binary representation:

3. Decentralized Web

Traditional websites are like 1800 numbers - rely solely on the website owner to bear the costs of hosting and computation. In contrast, the decentralized web leverages a distributed network of nodes. This means that the computational burden, and therefore the associated costs, can be shared among numerous participants, regardless of the website's traffic load.

Decentralized web is popularly called Web3.

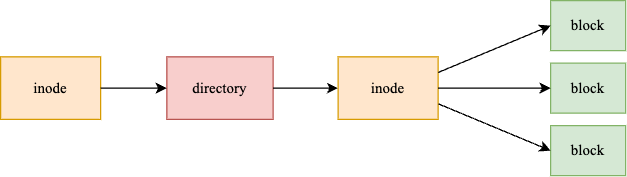

4. Decentralized File System

A decentralized file system represents each file block by its cryptographic hash. This hash uniquely identifies the block's content. Subsequently, these hashes are mapped to locations on a decentralized network of servers.

5. InterPlanetary File System

InterPlanetary File System (IPFS) is a decentralized file system that:

- Divides files into blocks.

- Generates a unique Content Identifier (CID) for each block using cryptographic hashing.

- Stores the mapping of CIDs to their locations on a DHT.

5.1. IPFS DHT Entries

Two key entries within the IPFS DHT are:

- Provider Records: Map CIDs to the PeerIDs of nodes that possess the corresponding blocks.

- Peer Records: Map PeerIDs to their respective Multiaddresses. Multiaddresses enable flexible p2p communication across various protocols (e.g., IPv4, IPv6, HTTP).

5.2. Node Discovery

While IPFS is a decentralized system, it relies on a set of special nodes known as bootstrap nodes. Bootstrap nodes play a crucial role in network discovery:

- They maintain a list of other nodes within the IPFS network.

- When an IPFS client starts, it connects to one or more bootstrap nodes to obtain a list of potential peers.

- The client then establishes connections with a subset of these peers, typically ranging from 500 to 1000 nodes.

This initial peer connectivity ensures that requests can be effectively routed and forwarded within the decentralized network.

5.3. Content Retrieval

Step 1: Local and Connected Peer Lookup

- Upon receiving requests for CIDs, the node first attempts to locate each block locally.

- If not found locally, it queries its connected peers using BitSwap (see 7.3) to see if any of them possess the desired blocks. This is called opportunistic lookup.

Step 2: DHT Lookup (Kademlia)

- If a block is not found among connected peers, the node initiates a DHT lookup using the Kademlia algorithm.

- This DHT lookup helps locate nodes within the network that are likely to have the requested block.

Step 3: BitSwap

- Want-List: The requesting node sends a list of desired CIDs (the "want-list") to its connected peers (for opportunistic lookup) or to the peer owning the block (found after DHT lookup).

- Block Sharing: Each node tracks the blocks its peers have requested. When a node receives a new block, it checks if any of its peers are seeking that specific block.

- Want-Have Responses:

- If a peer possesses a CID on the requesting node's want-list, it responds with a "want-have" message.

- If a peer does not have the CID, it responds with a "dont-have" message.

- Block Retrieval: Upon receiving a "want-have" response, the requesting node sends a "want-block" message to the peer, triggering the transfer of the requested block.

5.4. Content Caching

6. Challenges with Decentralized IPFS

The authors highlight several challenges in implementing a decentralized IPFS system:

1. Slow Data Publishing: The primary bottleneck in data publishing is the time-consuming process of replicating data records across 20 nodes in the DHT. The 95th percentile publishing time is a significant 66.73 seconds, primarily due to the overhead of DHT lookups to locate the 20 closest nodes for each CID. This slow publishing speed is unsuitable for real-time data streaming applications, although it may be acceptable for static data.

2. Slow Data Retrieval: Similar to publishing, data retrieval is also hindered by the time taken for DHT lookups. The average retrieval time is 4.42 seconds, which is considered too slow for many web3 applications that require rapid data access.

3. Complexity of Setup: Setting up and using IPFS currently requires a degree of technical expertise. Given that 58% of internet traffic originates from mobile devices and 92% of users access the web via smartphones, the complexity of IPFS setup presents a significant barrier to widespread adoption.

7. Towards Centralization

The authors propose a solution that introduces a degree of centralization to address the performance limitations of decentralized IPFS. It's crucial to emphasize that this centralization is limited in scope and focuses solely on performance improvements. The core decentralized nature of the IPFS network remains intact. Users can still bypass these centralized components if desired.

7.1. Centralized Components

- InterPlanetary Network Indexers: These accelerate content publication and retrieval by optimizing data indexing and lookup processes.

- Hydra Boosters: These specialized nodes improve performance by reliably hosting a large number of provider records, enhancing the efficiency of locating data within the network.

- HTTP Gateways: These gateways provide an HTTP interface to IPFS, facilitating easier integration and adoption by simplifying access for developers and applications.

The authors also performed a massive surgery on the IPFS client code to integrate all these centralized components.

8. InterPlanetary Network Indexers

InterPlanetary Network Indexers are high-performance key-value stores that efficiently index provider records. The key is CID. The value is the physical addresses of peers that possess the content, along with the protocols to be used. By offloading the storage of provider records from the DHT, Indexers significantly reduce network overhead and improve query performance.

Pebble (by Cockroach DB Labs), a high-performance key-value store, is used as the underlying data store.

8.1. Advertisement

To be visible on the network, a peer must advertise its available content to the Indexers. This is achieved through a chain of immutable advertisements – a data structure that records the publication or deletion of content by a given provider.

Providers maintain these advertisement chains locally. Information about these advertisements is disseminated to Indexers via gossip-based announcement messages. Indexers enable efficient bulk publishing of content availability information.

8.2. Client Queries

9. Hydra Boosters

Hydra introduces shortcuts within the IPFS routing space to improve performance. It consists of:

- Hydra Head Nodes: These nodes are strategically placed within the DHT itself.

- Shared Hydra Database: This database, implemented using Amazon DynamoDB, stores mappings between CIDs and peer addresses. Hydra Head nodes have access to this database.

9.1. Picking Node IDs for Hydra Heads

Hydra Heads are strategically placed within the DHT to ensure efficient routing. To do this, the PeerIDs of Hydra Heads are tracked in a XOR trie. When adding a new node:

- Two random node IDs are generated (power of two choices).

- The node ID that keeps the depth of the trie more regular is selected for the Hydra Head.

Consider load balancing requests across N symmetric servers.

-

Option 1 (Random): Distributing requests randomly across servers.

Option 2 (Two Choices): Selecting two random servers and routing the request to the server with the shorter queue.

-

Option 3 (Three Choices): Selecting three random servers and routing to the server with the lowest load.

10. HTTP Gateway

HTTP Gateways provide a bridge between the IPFS network and the standard HTTP protocol. These gateways leverage NGINX, a high-performance web server, to cache frequently accessed IPFS content. NGINX employs a Least Recently Used (LRU) cache replacement policy.

11. Evaluation

11.1. Indexer Performance

- The indexer currently stores a massive 173 billion records, a 100x increase compared to the DHT.

- This data is primarily contributed by 604 major publishers utilizing NFTs or dedicated storage services.

- The indexer handles 2.5k lookup requests per second, while 5k requests still rely on DHT lookups.

- Requires a significant capital investment ($10k) and monthly operating costs ($1k) per indexer.

- The indexer cache has a 65.22% hit rate, significantly accelerating lookups.

- Even for the 34.78% of requests that miss the cache, latency remains lower than direct DHT lookups.

11.2. Hydra Booster Performance

- Hydra boosters contribute to improved lookup times, although the benefits are not uniform across all regions. Regions like eu-central, already exhibiting good performance, did not experience significant improvements.

- The placement strategy effectively positions Hydra Heads, ensuring that 96.6% of peers have at least one Hydra Head within their 20-proximity.

- Lookup speed is improved by -3.3% to 36.5% with Hydra Boosters. Performance at the tail of the distribution is still comparable to direct DHT lookups.

- This proximity significantly reduces DHT hops, often to a single hop.

11.3. HTTP Gateway Performance

- Cache hits, with 50.3% hit rate, achieve a 24ms p95 latency.

- An additional 27.6% of requests are resolved by contacting the first node directly.

- For the remaining requests, performance may degrade slightly due to the extra hop introduced by the gateway servers.

12. Problems

This centralized approach presents several critical issues:

- Censorship Risk: Indexers can potentially facilitate censorship of content within the IPFS network.

- Hydra Booster Issues:

- The underlying Kademlia DHT is already vulnerable to malicious actors.

- Hydra Boosters, while intended to improve performance, exacerbate this vulnerability by enabling malicious nodes to strategically position themselves close to several other nodes.

- There are additional performance concerns.

- Indeed Hydra Boosters have now been turned down by IPFS.

- Gateway Security Weaknesses: HTTP gateways compromise the end-to-end cryptographic validation process, introducing security risks.

- False and slow advertisements can significantly degrade the user experience.

Comments

Post a Comment