The virtualization techniques discussed in previous insights were highly popular and widely adopted across data centers throughout the 2000s and 2010s. However, traditional operating systems are often bulky; running multiple instances on the same hardware proved costly, particularly for cloud providers. Consequently, more lightweight virtualization alternatives were developed to improve efficiency.

This paper, co-authored by Alexandru Agache and distinguished AWS scientist Marc Brooker, along with other researchers, was presented at the esteemed Networked Systems Design and Implementation (NSDI) '20 conference. It introduces Firecracker, a lightweight virtualization framework designed to host AWS Lambda, Amazon's serverless computing service.

In this insight, we will begin by establishing fundamental cloud computing concepts and introducing the specific tools utilized in the paper. We will then conduct a technical deep dive into Firecracker.

Recommended Read: Paper Insights - A Comparison of Software and Hardware Techniques for x86 Virtualization where several virtualization concepts were introduced.

1. Serverless Computing

Serverless computing is a cloud computing paradigm where the cloud provider dynamically allocates machine resources, especially compute, as needed. The cloud provider manages the underlying servers.

AWS offers a comprehensive serverless stack with a diverse range of products, including:

- API Gateway: For creating and managing APIs.

- Lambda: For executing serverless compute functions.

- Simple Storage Service (S3): For serverless object storage.

2. Virtualization

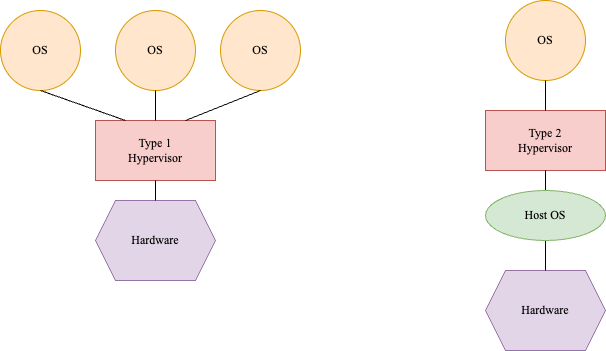

Virtualization is a technology that enables the creation of virtual resources from underlying machine resources. Broadly, there are two types of virtualization:

2.1. Full Virtualization

In full virtualization, a hypervisor presents each guest with a complete virtual machine, so an unmodified guest operating system can run on it. Hypervisors come in two types:

- Type 1 hypervisors: Run directly on bare-metal hardware, such as ESX Server.

- Type 2 hypervisors: Run on top of another operating system, such as VirtualBox.

Note: A hypervisor is also known as a Virtual Machine Monitor (VMM).

Illustration 1: Type 1 v/s Type 2 hypervisors.

Full virtualization can be implemented using two techniques:

- Binary translation (as in classical virtualization): Translates privileged guest instructions into safer alternatives on-the-fly. Some early x86 virtualization solutions relied heavily on binary translation. The hypervisor intercepted privileged instructions (like those accessing hardware directly) and dynamically translated them into safe instructions that could be executed within the virtual machine's context.

- Hardware-assisted virtualization: The CPU (e.g. Intel VT-x and AMD-V) itself provides virtualization support. It utilizes specialized CPU instructions to accelerate virtualization processes.

Both Type 1 and Type 2 hypervisors can support both techniques of full virtualization. Specifically, in binary translation, the core functionalities provided by the hypervisor are:

- Instruction translation: Guest operating system instructions are executed in non-privileged mode on the CPU. However, when a privileged instruction (e.g., accessing hardware directly) is encountered, it triggers a trap, transferring control to the hypervisor for translation.

- Page table translation: Guest operating system's page table entries are translated into the corresponding entries within the host operating system's page table.

Note: These core functionalities have numerous variations and optimizations across different hypervisor implementations.
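
The page table translation described above can be sketched as composing two mappings. This is a minimal illustration and not how any particular hypervisor implements it: the function name `build_shadow_table` and the flat dictionaries are assumptions, and real page tables are multi-level and carry permission bits.

```python
# Illustrative model only: page numbers, no offsets, permissions, or multi-level tables.
def build_shadow_table(guest_table: dict, host_table: dict) -> dict:
    """Compose guest-virtual -> guest-physical with guest-physical -> host-physical."""
    shadow = {}
    for guest_virtual, guest_physical in guest_table.items():
        # Skip guest pages that the hypervisor has not backed with host memory.
        if guest_physical in host_table:
            shadow[guest_virtual] = host_table[guest_physical]
    return shadow


guest_table = {0: 7, 1: 3, 2: 9}   # maintained by the guest operating system
host_table = {7: 100, 3: 205}      # maintained by the hypervisor
shadow = build_shadow_table(guest_table, host_table)
print(shadow)  # {0: 100, 1: 205}
```

Guest page 2 is absent from the result because its guest-physical page has no host backing in this example; a real hypervisor would handle that case by faulting the page in.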

2.2. OS-level Virtualization

In OS-level virtualization, applications share the host operating system kernel, and each one runs in an isolated user-space environment (a container). Ordinarily, a process can observe system capabilities such as:

- Hardware capabilities: CPU and network interface card (NIC) characteristics.

- Data: Access to files and folders.

- Peripherals: Connected devices like webcams, printers, and scanners.

Containers, however, restrict these system capabilities.

3. Linux Containers

Linux containers utilize OS-level virtualization techniques to provide application isolation. Key mechanisms enabling this isolation include:

- cgroups: Isolate and limit resource consumption (CPU, memory, I/O) for individual containers.

- namespaces: Create isolated environments for processes, network, and user ids.

- seccomp-bpf: Restrict the system calls available to a container, enhancing security.

- chroot: Isolate the container's file system view, limiting its access to specific parts of the host system's file system.

4. Serverless Computing v/s Containers

Serverless computing focuses on event-driven functions, where code executes in response to specific triggers (e.g., API calls, data changes).

Containers, on the other hand, package applications and their dependencies into self-contained units, providing consistent execution environments across different systems.

5. KVM and QEMU

KVM (Kernel-based Virtual Machine) is a Type 1 hypervisor that operates directly on the host hardware. It leverages hardware-assisted virtualization extensions like Intel VT-x and AMD-V. KVM is tightly integrated within the Linux kernel.

QEMU (Quick Emulator) is a versatile open-source software that emulates various hardware components, including CPUs, disks, and network devices.

When combined, KVM and QEMU create a powerful virtualization solution. QEMU provides the necessary I/O emulation layer, while KVM harnesses the host hardware's virtualization capabilities, resulting in high-performance virtual machines.

With that background in mind, let's jump into the details of the paper.

6. Introduction to Firecracker

Firecracker is a lightweight VMM that runs on top of KVM. It is designed for serverless computing.

Q. Why doesn't Firecracker use Linux containers (or other OS-level virtualization)?

Firecracker prioritizes strong security isolation over the flexibility offered by Linux containers. Virtualization, with its inherent hardware-level isolation, provides robust defense against a wider range of attacks, including sophisticated threats like side-channel attacks. Containers offer some isolation, but can still be vulnerable to these types of attacks.

Q. Why doesn't Firecracker use QEMU?

QEMU is bulky. Firecracker was designed to be lightweight and efficient, enabling higher server density and minimizing overhead for serverless functions.

7. Firecracker VMM

7.1. The KVM Layer

Firecracker leverages KVM for hardware-assisted virtualization, enhancing performance and security:

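- Guest CPU instructions execute directly on the hardware, with KVM using hardware assistance to intercept privileged operations.

- Each guest vCPU runs as a thread on the host, so the host Linux scheduler decides when it runs. Scheduling of threads and processes inside the guest remains the guest kernel's job.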

- KVM provides robust CPU isolation at the hardware level, preventing unauthorized access to host resources.

7.2. The VMM Layer

On top of KVM, instead of using the full-fledged QEMU, Firecracker employs a lightweight VMM implementation (re-using crosvm) that supports a limited set of essential devices:

- Serial ports for basic input/output.

- Network interfaces for communication.

- Block devices for storage.

The serial port has a lightweight implementation of fewer than 250 lines of code. All network and block I/O in Firecracker goes through virtio, a standardized interface for exposing emulated devices to guests. The virtio implementation is also concise, at under 1400 lines of code.

7.3. Rate Limiters

CPU and memory resources are constrained by limiting the cores and memory exposed to the guest and by controlling what the cpuid instruction reports through KVM. This approach ensures a homogeneous compute fleet. However, it is significantly less sophisticated than the cgroup mechanism used by Linux containers, which offers advanced features such as CPU credit scheduling, core affinity, scheduler control, traffic prioritization, performance events, and accounting.

Virtual network and block devices also incorporate in-memory token bucket-based rate limiters. These limiters control the receive/transmit rates for network devices and I/O operations per second (IOPS) for block devices.
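
A token bucket can be sketched in a few lines. The following is a generic illustration of the technique and not Firecracker's actual implementation (which is written in Rust): the class name `TokenBucket`, its parameters, and the 100 IOPS limit are all assumptions. The clock is passed in explicitly so the behaviour is deterministic.

```python
class TokenBucket:
    """Generic token bucket; names and parameters are illustrative assumptions."""

    def __init__(self, capacity: float, refill_per_second: float, now: float = 0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = capacity       # start full, which allows an initial burst
        self.last_refill = now

    def try_consume(self, amount: float, now: float) -> bool:
        """Refill for the elapsed time, then spend `amount` tokens if available."""
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if amount <= self.tokens:
            self.tokens -= amount
            return True
        return False                 # caller defers the I/O until tokens refill


# Hypothetical limit: 100 operations per second with a burst of 10.
bucket = TokenBucket(capacity=10, refill_per_second=100)
burst = [bucket.try_consume(1, now=0.0) for _ in range(11)]
print(burst.count(True))                 # 10: the eleventh request is rejected
print(bucket.try_consume(1, now=0.05))   # True: 0.05 s refills 5 tokens
```

The bucket capacity bounds the burst size, while the refill rate bounds the sustained rate. The same structure works for bytes per second on a network device by consuming tokens equal to the packet size.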

8. Firecracker Internals (Figure 3)

Figure 3: Copy of figure 3 from the paper.

8.1. Firecracker Architecture

- MicroManager: The central component of Firecracker, responsible for spawning and managing VMs (or, as they call it, MicroVMs).

- Each VM is spawned as a process running on the KVM layer.

- This process itself has subcomponents:

- Slot: Contains the guest operating system kernel. Inside the guest, the lambda function runs as a user-space process. The lambda binary includes a λ-shim that facilitates communication with the MicroManager over TCP/IP.

- Firecracker VMM: Handles all virtual I/O operations.

8.2. Lambda Function

- Slot Affinity: Each lambda function is tightly bound to a specific slot (VM), ensuring consistent execution within the same environment.

- Event-Driven: Lambdas are designed to be event-driven, activating only when events (such as incoming requests or messages) arrive. This minimizes resource consumption during idle periods.

- Slot States:

- Idle: The VM is descheduled, consuming minimal CPU resources but retaining its memory state.

- Busy: The VM is actively processing events, utilizing both CPU and memory.

- Dead: The VM has been terminated and removed from the system.
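
The slot states above can be modelled as a small state machine. This is only a sketch: the allowed transitions are assumptions inferred from the state descriptions, and the names `SlotState`, `ALLOWED`, and `transition` are hypothetical.

```python
from enum import Enum


class SlotState(Enum):
    IDLE = "idle"
    BUSY = "busy"
    DEAD = "dead"


# Assumed transitions for illustration; the paper does not list them in this form.
ALLOWED = {
    SlotState.IDLE: {SlotState.BUSY, SlotState.DEAD},
    SlotState.BUSY: {SlotState.IDLE, SlotState.DEAD},
    SlotState.DEAD: set(),   # a terminated slot is never reused
}


def transition(current: SlotState, target: SlotState) -> SlotState:
    """Return the new state, or raise ValueError if the move is not allowed."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot go from {current.value} to {target.value}")
    return target


state = SlotState.IDLE
state = transition(state, SlotState.BUSY)   # an event arrives
state = transition(state, SlotState.IDLE)   # the invocation finishes
state = transition(state, SlotState.DEAD)   # the slot is reclaimed
print(state.value)  # dead
```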

The paper explores specific scheduling constraints for lambda functions within the Firecracker environment to enhance performance and resource utilization. These constraints are detailed in Section 4.1.1 of the paper.

9. Multi-tenancy

A significant portion of lambda functions reside in an idle state, consuming approximately 40% of the available RAM. This characteristic can help in machine oversubscription.

The economic advantage of lambda functions stems from their ability to support multi-tenancy.

For example, consider a machine with 10GB of RAM. Suppose 10 lambda functions are scheduled on this machine, each allocated 5GB, and the compliance offering is 80% (guaranteeing at least 4GB of RAM to each lambda). The guaranteed capacity is then 10 × 4GB = 40GB against 10GB of physical RAM, so the machine is oversubscribed fourfold.

Assuming all lambdas can acquire their required resources upon request, the efficiency of the system can be calculated as:

Efficiency = Total Guaranteed Capacity / Machine Capacity = 40GB / 10GB = 4x

This high level of efficiency contributes significantly to revenue generation.
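
The arithmetic in the example above can be reproduced with a short helper. The function name `oversubscription_ratio` and its parameters are hypothetical, and the calculation counts the guaranteed share (allocation × compliance) of each function as the capacity being sold.

```python
def oversubscription_ratio(num_functions: int, allocated_gb: float,
                           compliance: float, machine_gb: float) -> float:
    """Guaranteed capacity across all functions divided by physical capacity."""
    guaranteed_per_function = allocated_gb * compliance
    return num_functions * guaranteed_per_function / machine_gb


ratio = oversubscription_ratio(num_functions=10, allocated_gb=5,
                               compliance=0.8, machine_gb=10)
print(ratio)  # 4.0
```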

10. Fast Boot time

The boot time for a Firecracker microVM is approximately 125ms.

Little's Law: Keep at least (creation rate × creation latency) slots ready.

For example, if the desired creation rate is 100 VMs per second and the boot time (creation latency) is 125ms (0.125s), the minimum number of ready VMs in the pool should be:

100 VMs/second * 0.125s = 12.5 VMs

Therefore, we need to maintain at least 13 ready VMs in the pool to accommodate the expected workload.
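
The same calculation as a small helper, rounding up because a fraction of a VM cannot be kept ready. The function name `min_ready_slots` is hypothetical.

```python
import math


def min_ready_slots(creation_rate_per_s: float, creation_latency_s: float) -> int:
    """Little's Law: items in the system = arrival rate x time in system, rounded up."""
    return math.ceil(creation_rate_per_s * creation_latency_s)


print(min_ready_slots(100, 0.125))  # 13
```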

11. Evaluation

11.1. Memory Overhead

This analysis focuses solely on the memory consumption of the VMM process within each MicroVM, as this constitutes the primary overhead of the MicroVM. Given that all VMMs are initiated as processes, the code is loaded into shared memory segments and consequently shared across all VMMs. This static overhead is excluded from the evaluation. Therefore, the analysis only considers the sum of non-shared memory segments, as identified by the pmap command.
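
A comparable measurement can be sketched by summing the private (non-shared) fields that Linux reports per mapping. Note the assumption: the paper uses pmap, whereas this sketch parses text in the `/proc/<pid>/smaps` field format instead. The function name `private_kib` and the sample numbers are made up for illustration.

```python
def private_kib(smaps_text: str) -> int:
    """Sum the Private_Clean and Private_Dirty fields of smaps-style text (in kB)."""
    total = 0
    for line in smaps_text.splitlines():
        if line.startswith(("Private_Clean:", "Private_Dirty:")):
            total += int(line.split()[1])   # second field is the size in kB
    return total


# Hand-written sample in the smaps field format; the numbers are invented.
sample = """\
Shared_Clean:        512 kB
Private_Clean:         4 kB
Private_Dirty:        12 kB
Shared_Clean:        128 kB
Private_Clean:         0 kB
Private_Dirty:       100 kB
"""
print(private_kib(sample))  # 116
```

On a Linux host, passing the contents of `/proc/<pid>/smaps` for a VMM process would give its non-shared memory in kB, with the shared code pages left out as described above.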

Firecracker is lightweight not only in binary size but also in per-VM RAM overhead, which is about 3 MB.

11.2. I/O Performance

Like QEMU, Firecracker VMM acts as an I/O emulator, handling all I/O operations within the virtual machine. This allows for software-level rate limiting of I/O traffic.

11.2.1. Block Device I/O Performance

Block devices (such as SSDs and HDDs) operate at block-level. In Firecracker VMM, the reads and writes to blocks are serialized. This serial access significantly impacts performance compared to QEMU or bare-metal systems (increased latency and reduced throughput).

Large-block writes appear efficient, but only because Firecracker does not flush to disk on write. Without flush-on-write, a write can complete before the data reaches the underlying storage; making it durable requires an explicit flush, which adds latency.

11.2.2. Network Device Performance

Emulated network devices within Firecracker also exhibit lower throughput compared to dedicated hardware.

12. Security

The paper discusses several types of attacks that can potentially affect Lambda functions:

- Side-Channel Attacks: These attacks exploit unintended information leakage through channels other than the primary data flow.

- Cache Side-Channel Attacks: Multiple VMs might share the same physical cache. By analyzing cache access patterns, an attacker could potentially infer sensitive information, such as cryptographic keys.

- Timing Side-Channel Attacks: These attacks involve measuring the execution time of another process to glean information.

- Power Consumption Side-Channel Attacks: These attacks attempt to extract information by monitoring the power consumption of a target process.

- Meltdown Attacks: These attacks exploit a race condition in modern CPUs that allows a process to bypass privilege checks and potentially read the memory of other processes. The race condition happens between memory access and privilege checking during instruction processing.

- Spectre Attacks: These attacks use timing of speculative execution and branch prediction to infer sensitive data like cryptographic keys and passwords.

- Zombieload Attacks: By carefully timing the execution of specific instructions, attackers can observe the side effects of "zombie loads" (a type of memory access) and potentially extract sensitive information.

- Rowhammer Attacks: These attacks exploit a physical limitation of DRAM where repeated access to a specific memory row can cause unintended bit flips in neighboring rows, leading to data corruption.

The paper also provides the following remedies:

- Disabling Simultaneous Multithreading (SMT, a.k.a. Hyper-Threading): SMT allows multiple instruction streams to execute concurrently on a single physical core. Disabling SMT can help reduce the potential for one process to observe the activity of another, thereby mitigating certain types of side-channel attacks.

- Kernel Page-Table Isolation (KPTI): KPTI isolates the kernel's page table from user-space page tables. This separation helps to prevent Meltdown attacks, which exploit vulnerabilities in memory access control mechanisms.

- Indirect Branch Prediction Barriers (IBPB): IBPB prevents previously executed code from influencing the prediction for future indirect branches. This helps to mitigate speculative execution attacks like Spectre.

- Indirect Branch Restricted Speculation (IBRS): IBRS is a hardware-based mitigation technique that aims to restrict the impact of speculative execution on sensitive data.

- Mitigating Cache-Based Attacks: Techniques like Flush+Reload and Prime+Probe exploit cache sharing between processes. Mitigating these attacks often involves avoiding shared files and implementing appropriate memory access controls.

- Disabling Swap: Disabling swap keeps guest memory from being written out to disk, which reduces the amount of sensitive data that resides on persistent storage.

13. Paper Remarks

The paper is exceptionally dense, distilling numerous complex concepts into a concise format. It uses jargon common in cloud computing and cybersecurity, which may initially overwhelm readers unfamiliar with these domains. However, it serves as an excellent introduction to modern lightweight virtualization, demonstrating both application performance and industry-grade security.
